In machine learning, few techniques are as foundational as cross-validation and the k-nearest neighbors (kNN) algorithm. kNN makes predictions from the most similar known examples, and cross-validation checks that those predictions are robust and generalize to unseen data.

In this article:

- What is k-Nearest Neighbors (kNN)

- Deciphering Distance

- Cross-validation – Ensuring Robustness

- Common Pitfalls

- Concluding Thoughts

What is k-Nearest Neighbors (kNN)

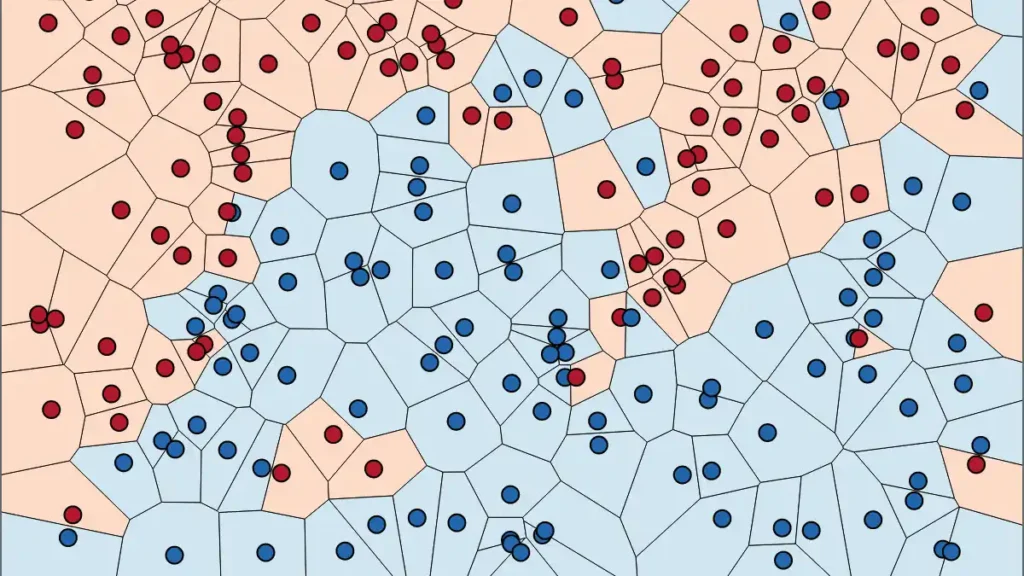

Often, the simplest ideas are the most profound. The kNN algorithm, rooted in the idea of similarity, exemplifies this.

1. Underlying Principle

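At its core, kNN operates on a single idea: data points that sit close together in feature space tend to share the same label. To classify a new point, kNN finds the ‘k’ most similar training examples and lets them vote. If you’ve ever guessed the genre of a song by comparing it to others you know, you’ve employed a kNN-like strategy.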
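To make the idea concrete, here is a minimal sketch of kNN classification by majority vote, using only the Python standard library. The function name `knn_predict` and the toy song data are made up for illustration, and Euclidean distance is assumed.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k=3):
    """Predict the label of `query` by majority vote among its k nearest training points."""
    # Pair each training point's Euclidean distance to the query with its label.
    distances = [
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    ]
    # Keep the k closest points and count their labels.
    nearest = sorted(distances, key=lambda pair: pair[0])[:k]
    votes = Counter(label for _, label in nearest)
    return votes.most_common(1)[0][0]

# Toy data (made up for illustration): two numeric features per song.
songs = [(1.0, 1.0), (1.2, 0.8), (0.9, 1.1), (5.0, 5.2), (5.1, 4.9), (4.8, 5.0)]
genres = ["folk", "folk", "folk", "metal", "metal", "metal"]

print(knn_predict(songs, genres, query=(1.1, 1.0), k=3))  # folk
print(knn_predict(songs, genres, query=(5.0, 5.0), k=3))  # metal
```

Note that there is no training step here: the "model" is simply the stored data, and all the work happens at prediction time.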

2. Choice of ‘k’

The number of neighbors, ‘k’, is a hyperparameter that can significantly impact your model’s performance. A smaller ‘k’ can fit noise in the data, whereas a larger ‘k’ can smooth over finer details. A common way to choose ‘k’ is to compare validation error across several candidate values, for example with an elbow-style plot of error against ‘k’ or with cross-validation.

3. Weighted Voting

Not all neighbors are created equal. Sometimes, it’s beneficial to give more weight to closer neighbors. For instance, using inverse distance weighting ensures that nearer points have a more significant say in the outcome.
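As a rough sketch of inverse distance weighting, the example below lets each neighbor vote with weight 1/distance. The function name `weighted_knn_predict`, the small `eps` guard, and the toy data are assumptions made for illustration.

```python
import math
from collections import defaultdict

def weighted_knn_predict(train_points, train_labels, query, k=3, eps=1e-9):
    """Predict a label where each of the k nearest neighbors votes with weight 1/distance."""
    neighbors = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )[:k]
    scores = defaultdict(float)
    for distance, label in neighbors:
        # eps avoids division by zero when the query coincides with a training point.
        scores[label] += 1.0 / (distance + eps)
    return max(scores, key=scores.get)

# Toy 1-D data (made up): one very close "a" neighbor and two farther "b" neighbors.
points = [(1.0,), (3.0,), (3.2,)]
labels = ["a", "b", "b"]

# An unweighted vote with k=3 would pick "b" (two votes to one).
# With inverse distance weights, the single nearby "a" outweighs both "b" points.
print(weighted_knn_predict(points, labels, query=(1.1,), k=3))  # a
```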

4. Applications

From recommending movies on streaming platforms to classifying handwritten digits, kNN’s versatility shines across various domains.

Deciphering Distance

The heart of kNN is determining the “distance” or “difference” between data points.

1. Euclidean

Think of it as the straight-line distance between two points in space. Suitable for continuous data.

2. Manhattan

This is the distance between two points measured along axes at right angles—akin to navigating a grid-based city like New York.

3. Minkowski

A generalized distance metric controlled by a parameter p: with p = 1 it becomes the Manhattan distance, and with p = 2 it becomes the Euclidean distance.
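The relationship between the three metrics is easy to check in code. The sketch below sums the absolute coordinate differences raised to the power p, then takes the p-th root; the function name `minkowski_distance` is chosen for illustration.

```python
def minkowski_distance(a, b, p=2):
    """Minkowski distance of order p between two equal-length points."""
    if len(a) != len(b):
        raise ValueError("points must have the same number of dimensions")
    return sum(abs(x - y) ** p for x, y in zip(a, b)) ** (1.0 / p)

a, b = (0, 0), (3, 4)
print(minkowski_distance(a, b, p=1))  # Manhattan: |3| + |4| = 7.0
print(minkowski_distance(a, b, p=2))  # Euclidean: sqrt(9 + 16) = 5.0
```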

Cross-validation – Ensuring Robustness

No matter how good your model’s training performance is, if it fails on unseen data, it’s of limited use. Enter cross-validation.

1. Why Not Just Split Once?

A single train-test split can be deceiving. Your model might perform exceptionally well, or poorly, simply because of which samples happened to land in the test set. Cross-validation averages performance over multiple splits, giving a more reliable estimate of how the model will generalize.
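The averaging step can be sketched in a few lines. The example below is a minimal, standard-library illustration rather than a production implementation: the helper names (`k_fold_indices`, `cross_val_accuracy`) and the toy data are made up, and the data is assumed to be already shuffled so that contiguous folds contain both classes.

```python
import math
from collections import Counter

def knn_predict(train_points, train_labels, query, k):
    """Majority vote among the k training points nearest to `query`."""
    nearest = sorted(
        (math.dist(point, query), label)
        for point, label in zip(train_points, train_labels)
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

def k_fold_indices(n_samples, n_folds):
    """Yield (train_idx, test_idx) pairs so that each sample is tested exactly once."""
    base, extra = divmod(n_samples, n_folds)
    start = 0
    for fold in range(n_folds):
        # The first `extra` folds get one additional sample.
        stop = start + base + (1 if fold < extra else 0)
        test_idx = list(range(start, stop))
        train_idx = list(range(0, start)) + list(range(stop, n_samples))
        yield train_idx, test_idx
        start = stop

def cross_val_accuracy(points, labels, k, n_folds):
    """Return the list of per-fold accuracies for kNN with the given k."""
    scores = []
    for train_idx, test_idx in k_fold_indices(len(points), n_folds):
        train_points = [points[i] for i in train_idx]
        train_labels = [labels[i] for i in train_idx]
        correct = sum(
            knn_predict(train_points, train_labels, points[i], k) == labels[i]
            for i in test_idx
        )
        scores.append(correct / len(test_idx))
    return scores

# Toy data (made up), interleaved so that every fold contains both classes.
points = [(1.0, 1.0), (5.0, 5.0), (1.5, 2.0), (4.5, 5.5), (2.0, 1.0), (5.5, 4.5),
          (3.0, 3.2), (3.2, 3.0), (1.0, 2.0), (5.0, 4.0), (2.5, 2.0), (4.0, 4.5)]
labels = ["a", "b"] * 6

for k in (1, 3, 5):
    scores = cross_val_accuracy(points, labels, k, n_folds=4)
    print(f"k={k}: per-fold={scores}, mean={sum(scores) / len(scores):.2f}")
```

Looking at the per-fold scores alongside the mean shows how much a single split could have misled you, and comparing the means across values of ‘k’ is one way to pick that hyperparameter.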

2. Stratified k-Fold

In datasets where certain categories are underrepresented, stratified k-fold ensures that each fold keeps roughly the same class proportions as the full dataset, so that no fold ends up with too few (or zero) examples of a rare class.
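One simple way to get this behavior is to deal each class’s samples out to the folds round-robin, like cards. The sketch below assumes that approach; the function name `stratified_folds` and the toy labels are made up, and library implementations handle shuffling and uneven class counts more carefully.

```python
from collections import Counter, defaultdict

def stratified_folds(labels, n_folds):
    """Assign sample indices to folds so each fold keeps roughly the overall class mix."""
    by_class = defaultdict(list)
    for index, label in enumerate(labels):
        by_class[label].append(index)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        # Deal this class's samples out one fold at a time.
        for position, index in enumerate(indices):
            folds[position % n_folds].append(index)
    return folds

# Imbalanced toy labels (made up): 8 "common" samples and 4 "rare" ones.
labels = ["common"] * 8 + ["rare"] * 4

for fold in stratified_folds(labels, n_folds=4):
    # Each fold ends up with 2 "common" and 1 "rare" sample.
    print(Counter(labels[i] for i in fold))
```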

3. Time Series Data

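Traditional cross-validation can be problematic for time-dependent data, because shuffled folds let the model train on observations that come after the ones it is validated on. Techniques like scikit-learn’s TimeSeriesSplit address this by ensuring that each validation set comes after its training set in time.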
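The idea can be sketched as an expanding window: each split trains on everything up to some point in time and validates on the block that follows. The function below is a simplified illustration loosely modeled on that behavior, not the library’s implementation; the name `expanding_window_splits` is hypothetical, and samples are assumed to be sorted by time.

```python
def expanding_window_splits(n_samples, n_splits):
    """Yield (train_idx, test_idx) where every test index is later than every train index."""
    test_size = n_samples // (n_splits + 1)
    if test_size == 0:
        raise ValueError("not enough samples for the requested number of splits")
    for split in range(n_splits):
        # Test blocks sit at the end of the series; the training window grows each split.
        test_start = n_samples - (n_splits - split) * test_size
        train_idx = list(range(0, test_start))
        test_idx = list(range(test_start, test_start + test_size))
        yield train_idx, test_idx

for train_idx, test_idx in expanding_window_splits(n_samples=8, n_splits=3):
    print("train:", train_idx, "validate:", test_idx)
# train: [0, 1] validate: [2, 3]
# train: [0, 1, 2, 3] validate: [4, 5]
# train: [0, 1, 2, 3, 4, 5] validate: [6, 7]
```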

Common Pitfalls

1. Overfitting in kNN

If ‘k’ is too low, your model might be overly sensitive to noise in the data.
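A tiny, made-up example shows the effect. One mislabeled point sits in the middle of an otherwise clean cluster; with k = 1 the prediction follows the noise, while a larger k outvotes it. The data and the helper `knn_predict` are assumptions for illustration.

```python
import math
from collections import Counter

def knn_predict(points, labels, query, k):
    """Majority vote among the k points nearest to `query`."""
    nearest = sorted(
        (math.dist(point, query), label) for point, label in zip(points, labels)
    )[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]

# Toy 1-D data (made up): a cluster of "a" points with one noisy "b" at 1.5.
points = [(1.0,), (1.2,), (1.4,), (1.6,), (1.5,), (6.0,), (6.2,)]
labels = ["a", "a", "a", "a", "b", "b", "b"]
query = (1.52,)

print(knn_predict(points, labels, query, k=1))  # b (follows the single noisy neighbor)
print(knn_predict(points, labels, query, k=5))  # a (four "a" votes outweigh one "b")
```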

2. Computational Intensity

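kNN stores the entire training set, and a naive prediction computes the distance from the query to every stored point. Both memory use and prediction time therefore grow with the size of the dataset, which makes kNN costly for large datasets.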

3. Normalization

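Because kNN relies on distances, features measured on larger scales can unduly influence the result. Normalize or standardize your features before fitting, and compute the scaling statistics from the training data only, so that no information leaks in from the validation folds.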
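The sketch below illustrates the problem with made-up data: income in dollars swamps age in years until both features are standardized (zero mean, unit variance). The helper names and the numbers are assumptions for illustration, and the scaling statistics are deliberately computed from the training rows only.

```python
import math
import statistics

def fit_standardizer(rows):
    """Compute per-feature mean and standard deviation from training rows only."""
    columns = list(zip(*rows))
    means = [statistics.fmean(col) for col in columns]
    # Fall back to 1.0 for constant features to avoid dividing by zero.
    stds = [statistics.pstdev(col) or 1.0 for col in columns]
    return means, stds

def standardize(row, means, stds):
    """Rescale one row using previously fitted means and standard deviations."""
    return tuple((value - m) / s for value, m, s in zip(row, means, stds))

def nearest_index(points, query):
    """Index of the point closest to `query` by Euclidean distance."""
    return min(range(len(points)), key=lambda i: math.dist(points[i], query))

# Toy rows (made up): (annual income in dollars, age in years).
train = [(30_000, 25), (31_000, 70), (90_000, 30)]
query = (40_000, 26)

# Raw features: income dominates, so the nearest neighbor is the 70-year-old.
print(nearest_index(train, query))  # 1

# Standardized features: age now counts, so the nearest neighbor is the 25-year-old.
means, stds = fit_standardizer(train)
scaled_train = [standardize(row, means, stds) for row in train]
scaled_query = standardize(query, means, stds)
print(nearest_index(scaled_train, scaled_query))  # 0
```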

Concluding Thoughts

Pairing the intuitive simplicity of kNN with the rigor of cross-validation gives data scientists a potent combination. While both techniques are foundational, mastering them sets the stage for more advanced pursuits in the machine-learning landscape. As with any tool, understanding when and how to deploy them is crucial.

Armed with this knowledge, one is well on their way to becoming a proficient machine learning practitioner.