Welcome to a journey through Solomonoff’s Theory of Inductive Inference! The goal of this article is to shed light on an arcane yet highly influential theory that helped lay the theoretical foundations of modern machine learning, a theory born out of mathematical elegance, computer science rigor, and a dash of philosophical zest. You’ll leave not just with an understanding of what Solomonoff’s Theory is but with the curiosity to see where it could lead us next in the vast landscape of artificial intelligence. Let’s delve in!

In this article:

- What is Inductive Inference?

- The Man Behind the Theory

- Solomonoff’s Formalism

- Bayesian Connection

- Occam’s Razor Revisited

- Applications in Machine Learning

- Challenges and Criticisms

- Conclusion

- Bibliography

What is Inductive Inference?

Two words that sound like they belong in a philosophy seminar rather than a discussion on artificial intelligence. Yet, understanding this concept is key to unlocking the power and potential of machine learning and AI systems. So, what exactly is inductive inference, and why should you, dear reader, care about it?

Inductive inference is, at its core, the process of making generalized predictions or drawing conclusions based on a set of specific observations. Imagine you’re a detective with only a few clues: some footprints, a strand of hair, and a handwritten note. From these limited pieces of evidence, you try to solve a whole crime—identifying who did it, how, and why. That’s induction in action! You’re using specific details to make broader generalizations.

Learn from specifics to make informed generalities

In the realm of artificial intelligence, inductive inference is the linchpin that lets machine learning algorithms make sense of data. Take a simple example like email filtering. Your email service collects data on emails you’ve marked as ‘spam’ or ‘not spam.’ Using inductive inference, it can generalize from this small dataset to identify which future emails should be classified as spam. The stakes might be higher in other applications, like autonomous driving or medical diagnosis, but the fundamental principle remains the same: Learn from specifics to make informed generalities.

Why is it important?

Now, you may ask, why is this important in AI? Well, artificial intelligence systems often deal with incomplete or limited data. By applying principles of inductive inference, these systems can make educated guesses or predictions about unknowns, thereby improving their performance over time. It’s like teaching a computer to become a better detective with each new case it solves.

Probabilistic nature of inductive inference

Inductive inference is also intrinsically tied to probability. Most often, inductive reasoning doesn’t yield absolute certainties but probabilistic outcomes. For instance, given that it rained for the past three days, there’s a high probability that it will rain tomorrow, but it’s not a guarantee. This probabilistic nature makes inductive inference a flexible and robust framework for tackling a variety of real-world problems.
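To put a number on the rain example, here is a minimal sketch using Laplace’s rule of succession. That rule is an assumption brought in for illustration (it is not Solomonoff’s method): it treats every possible rain frequency as equally likely beforehand, and the function name `rule_of_succession` is made up for this example.

```python
from fractions import Fraction

def rule_of_succession(successes: int, trials: int) -> Fraction:
    """Laplace's estimate that the next trial succeeds: (s + 1) / (n + 2)."""
    return Fraction(successes + 1, trials + 2)

# Three rainy days out of the last three observed days.
print(rule_of_succession(3, 3))  # 4/5
```

Three rainy days in a row give 4/5, which is high but well short of certainty, exactly the kind of hedged conclusion induction produces.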

In summary, inductive inference is not just a lofty theoretical concept but a practical, essential tool in the AI toolbox. It’s the mechanism that allows machines to learn from data, adapt to new information, and make decisions in uncertain environments. As we dive deeper into Solomonoff’s theory, you’ll see how this fundamental idea takes a sophisticated, mathematical form that has been foundational for modern machine learning techniques.

The Man Behind the Theory

Ah, Ray Solomonoff—perhaps not a household name like Alan Turing or Elon Musk, but make no mistake, this man’s intellectual contributions have been nothing short of transformative for artificial intelligence. Born in 1926 in Ohio, Solomonoff was a polymath who dabbled in physics, math, computer science, and yes, even philosophy. He was a thinker whose eyes were set on the horizon, always in search of the next big idea.

Solomonoff’s motivations were deeply rooted in understanding the nature of intelligence, both human and artificial. In a time when AI was more science fiction than science fact, he was busy laying down mathematical foundations for what would later become known as machine learning. His eureka moment led him, in the early 1960s, to introduce the concept of algorithmic probability, a term that would make statisticians and computer scientists sit up and pay attention.

The essence of Solomonoff’s work revolved around the question, “How can we make machines learn in a way that’s both theoretically sound and as general as possible?” While other scientists were focused on the hardware (the ‘body’ of AI, if you will), Solomonoff was one of the pioneers diving deep into its ‘mind.’ His work has been widely cited and has shaped AI research for decades. In short, Solomonoff wasn’t just a theorist; he was a visionary.

Solomonoff’s Formalism

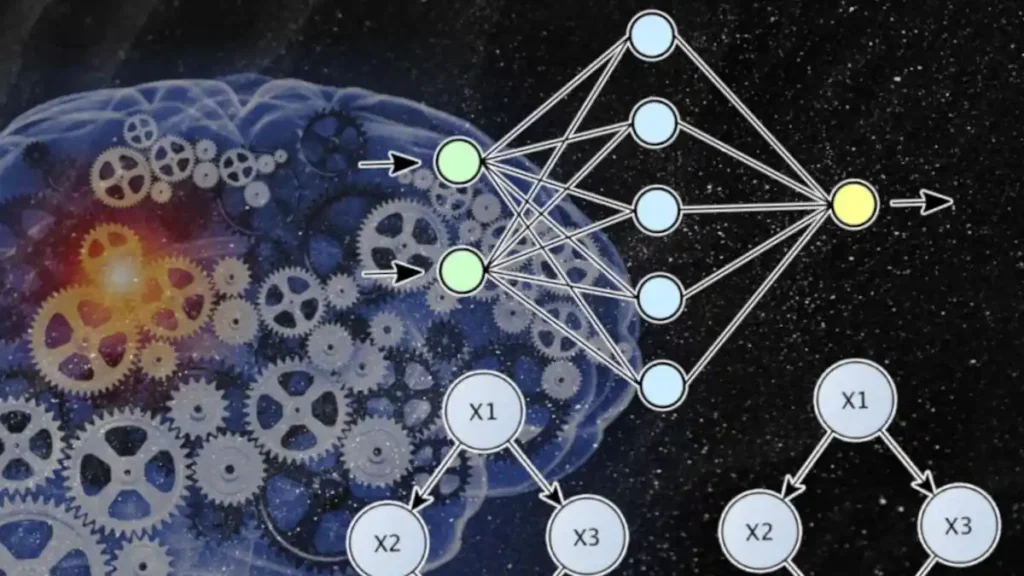

Time to roll up our sleeves and dig into Solomonoff’s formalism, his monumental mathematical framework for inductive inference. If inductive inference is the beating heart of AI, then Solomonoff’s formalism is the vascular system that carries its lifeblood. At the core of this formalism are two key concepts: algorithmic probability and universal Turing machines.

Algorithmic Probability

Imagine you have a string of binary data, let’s say “101010.” How can you predict the next bit in the sequence? Solomonoff’s answer to this question came in the form of algorithmic probability. It assigns probabilities to future outcomes by considering every program that could have generated the data seen so far and weighting each one by its length, so that shorter programs count for more. But it’s not just about predicting the next bit in a binary sequence; in principle, algorithmic probability provides a universal framework to quantify the likelihood of any computable sequence of events given prior data. Mind-blowing, isn’t it?
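For readers who want the symbols, the usual textbook statement can be sketched as follows. The notation is an assumption of this sketch rather than something defined above: $U$ is a fixed universal Turing machine, $p$ ranges over its (minimal) programs, $|p|$ is the length of $p$ in bits, and $U(p) = x*$ means that the output of $p$ begins with the string $x$.

```latex
M(x) \;=\; \sum_{p \,:\, U(p) = x*} 2^{-|p|}
\qquad\qquad
M(x_{n+1} \mid x_1 \dots x_n) \;=\; \frac{M(x_1 \dots x_n\, x_{n+1})}{M(x_1 \dots x_n)}
```

For the string “101010”, predicting the next bit therefore comes down to comparing $M(1010101)$ with $M(1010100)$.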

Universal Turing Machines

Solomonoff ingeniously built algorithmic probability on the concept of a universal Turing machine, a theoretical device that can simulate any other Turing machine. The candidate explanations for the data are programs for that machine, which makes the approach as general as possible: any computable pattern corresponds to some program. Essentially, he created a ‘one-size-fits-all’ mathematical model capable of learning from data.
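A real universal Turing machine cannot be searched exhaustively, but the flavor of the idea survives in a toy. The sketch below is a loose illustration under stated assumptions, not Solomonoff’s construction: `toy_machine` is a hypothetical, deliberately non-universal machine that just repeats its program forever, and each program of length n gets weight 4^-n (a choice made here so that the weights of all programs add up to at most 1).

```python
from itertools import product

def toy_machine(program: str, n: int) -> str:
    """Hypothetical stand-in for a universal machine: repeat the program's bits."""
    reps = n // len(program) + 1
    return (program * reps)[:n]

def toy_mass(x: str, max_len: int = 8) -> float:
    """Sum 4**-len(p) over every program p (up to max_len bits) whose output starts with x."""
    total = 0.0
    for length in range(1, max_len + 1):
        for bits in product("01", repeat=length):
            p = "".join(bits)
            if toy_machine(p, len(x)) == x:
                total += 4.0 ** -length  # shorter programs weigh more
    return total

def predict_next(x: str, max_len: int = 8) -> float:
    """Probability that the bit following x is '1' under the toy weighting."""
    one = toy_mass(x + "1", max_len)
    zero = toy_mass(x + "0", max_len)
    if one + zero == 0.0:
        return 0.5  # no program in range explains x; fall back to a coin flip
    return one / (one + zero)

print(predict_next("101010"))
```

On “101010” the toy predictor puts almost all of its weight on a next bit of 1, because the two-bit program “10” explains the data and dominates the sum. The real theory differs in the crucial respect that its machine can run any program at all.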

The synergy between algorithmic probability and universal Turing machines forms the backbone of Solomonoff’s formalism. It provides a rigorous, mathematically sound approach to inductive inference, transcending disciplines and applications. This formalism can be viewed as a theoretical ideal, a mathematical North Star that guides the design and understanding of practical machine learning algorithms.

In essence, Solomonoff’s formalism isn’t just a mathematical curiosity; it’s a compass for navigating the complex world of artificial intelligence, from natural language processing to robotic control systems.

Bayesian Connection

So, you’ve heard of Bayesian Inference, right? Of course you have! It’s the statistical darling of the 21st century, showing up everywhere from Wall Street algorithms to medical diagnoses. But here’s the kicker: Solomonoff’s Theory and Bayesian Inference are like long-lost siblings in the realm of AI and machine learning. How so? Let’s dig in.

At its heart, Bayesian Inference is about updating our beliefs based on new evidence. You start with a prior belief, collect some data, and voila, your belief is updated. Simple, yet powerful. Now, Solomonoff took this a step further. He tackled the question Bayes leaves open, namely where the prior comes from: algorithmic probability supplies a universal prior over all computable hypotheses, so the updates can be calculated systematically.

Essentially, Solomonoff’s formalism gives us the mathematical tools to perform Bayesian updates rigorously. Imagine having a Swiss Army knife that could, in principle, be pointed at the most intricate prediction problems, from predicting stock market trends to diagnosing diseases. That’s what the melding of Solomonoff and Bayesian methods gives us: an idealized all-in-one tool for inductive inference.
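Here is a minimal sketch of what such an update looks like on a hand-picked hypothesis set. Everything specific in it is an assumption made for illustration: the three hypotheses, their description lengths in bits, and the function name `posterior`. The only idea taken from the theory is that a hypothesis described in L bits starts with prior weight 2^-L.

```python
def posterior(hypotheses: dict, data: str) -> dict:
    """Bayesian update with a 2**-length prior.

    hypotheses maps a name to (description_length_bits, probability_of_a_1).
    """
    weights = {}
    for name, (length_bits, p_one) in hypotheses.items():
        prior = 2.0 ** -length_bits          # shorter description, larger prior
        likelihood = 1.0
        for bit in data:
            likelihood *= p_one if bit == "1" else 1.0 - p_one
        weights[name] = prior * likelihood   # unnormalized posterior
    total = sum(weights.values())
    return {name: w / total for name, w in weights.items()}

# Made-up hypotheses: (description length in bits, chance that a bit is 1).
coins = {
    "fair": (1, 0.5),
    "mostly_ones": (3, 0.9),
    "mostly_zeros": (3, 0.1),
}

print(posterior(coins, ""))            # before any data: the simple hypothesis leads
print(posterior(coins, "1111111111"))  # after ten 1s: "mostly_ones" takes over
```

With no data the shortest hypothesis holds two thirds of the belief; ten consecutive 1s are enough evidence to shift nearly all of it to the longer “mostly_ones” hypothesis.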

The broader implication? Well, it turns out this Bayesian-Solomonoff fusion offers a universal framework for learning and prediction. It’s like having a golden key that can, in theory at least, unlock any computable pattern hidden in a dataset. On paper, the possibilities are limitless!

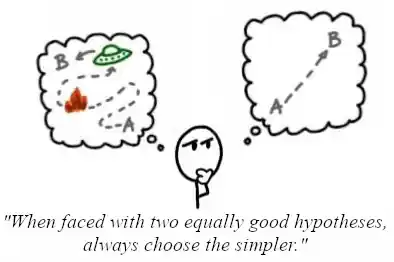

Occam’s Razor Revisited

Alright, let’s shift gears and talk about Occam’s Razor. You know, the principle that advises us to opt for the simplest explanation that fits the facts. In essence, Occam’s Razor tells us not to overcomplicate things. But here’s where it gets exciting: Solomonoff’s Theory actually provides a mathematical backbone for this age-old principle.

So, how does it all tie in? Remember algorithmic probability? Well, Solomonoff ingeniously formulated it to favor simpler algorithms over more complex ones when making predictions: every extra bit in a program’s length halves the weight that program receives. In other words, his theory mathematically enforces the principle of Occam’s Razor. Instead of just saying “keep it simple,” Solomonoff gives us the numbers that show how much a complex explanation has to earn its keep.
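A little arithmetic makes the penalty concrete. Assume the standard weighting in which a program of length $\ell$ bits receives prior weight $2^{-\ell}$, and take two made-up explanations of the same data, one 10 bits long and one 20 bits long:

```latex
\frac{\text{prior weight of the 10-bit program}}{\text{prior weight of the 20-bit program}}
\;=\; \frac{2^{-10}}{2^{-20}} \;=\; 2^{10} \;=\; 1024
```

The longer explanation starts out 1024 times less plausible, so it only overtakes the shorter one if it makes the observed data more than 1024 times as likely.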

This is no trivial feat. In AI, the temptation to go for complex, intricate models is ever-present. We often think more is better, but Solomonoff reminds us to take a step back. By applying mathematical rigor to the principle of simplicity, his theory serves as a sanity check for the field of AI. It reminds us that the best solutions are often the most elegant ones.

Applications in Machine Learning

By now, you’re probably wondering, “This Solomonoff guy sounds brilliant, but how do his ideas actually affect modern machine learning?” Excellent question! Solomonoff’s theory, while mathematically intensive, has indeed trickled down to influence practical techniques that machine learning engineers employ daily.

Take, for instance, Random Forests. These are ensembles of decision trees designed to improve predictive performance. Well, guess what? The link to Solomonoff is indirect, but it is there in spirit: pruning a decision tree or capping its depth trades a little fit for a simpler model, the same preference for short explanations that algorithmic probability formalizes.

And let’s not forget about Neural Networks. Nobody trains a network by running Solomonoff induction, but regularization, weight pruning, and the search for compact architectures all reflect the same idea. By aligning closely with the principle of Occam’s Razor, algorithm designers are nudged to prioritize simplicity and efficiency, core tenets of effective machine learning.
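One practical way this simplicity bias gets turned into a number is a two-part description length score: bits needed to state the model plus bits needed to encode the data with it. The sketch below is an illustration in that spirit under stated assumptions, not how Random Forests or neural networks are actually trained; the function `two_part_cost` and its quantization scheme are made up for this example.

```python
import math

def two_part_cost(data: str, param_bits: int) -> float:
    """Total bits = bits to state the model + bits to encode the data under it."""
    n = len(data)
    if n == 0:
        return float(param_bits)
    if param_bits == 0:
        return float(n)  # fair-coin model: nothing to state, one bit per symbol
    # Biased-coin model: state the frequency of '1' with param_bits of precision,
    # clamped so the probability stays strictly between 0 and 1.
    levels = 2 ** param_bits
    ones = data.count("1")
    k = min(max(round(ones / n * levels), 1), levels - 1)
    p = k / levels
    data_bits = -(ones * math.log2(p) + (n - ones) * math.log2(1 - p))
    return param_bits + data_bits

skewed = "1" * 90 + "0" * 10
balanced = "10" * 50

for name, data in [("skewed", skewed), ("balanced", balanced)]:
    print(name, two_part_cost(data, 0), two_part_cost(data, 4))
```

On the skewed string the extra parameter pays for itself many times over; on the balanced string it only adds cost, so the simpler model wins. That is Occam’s Razor as an accounting rule.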

Simply put, the spirit of Solomonoff’s Theory runs through much of machine learning. Whether it’s boosting algorithms or recommendation engines, his mathematical groundwork acts as a North Star guiding the field rather than a blueprint anyone implements directly. So yes, Solomonoff isn’t just a theoretical virtuoso; his legacy lives on in the ideas behind the algorithms that power your favorite apps!

Challenges and Criticisms

Alright, let’s be fair and balanced. No theory is perfect, and Solomonoff’s is no exception. Critics often point out that while the theory is mathematically elegant, any attempt to approximate it is computationally brutal. In plain speak, it can be a real resource hog: the number of candidate programs doubles with every extra bit of program length, so a brute-force search quickly becomes so unwieldy that even supercomputers would raise a white flag.

Another sticking point? Solomonoff’s formalism is not just expensive but uncomputable in the strict sense: no algorithm can calculate algorithmic probability exactly, because that would require knowing which programs eventually halt. That means that while it offers a theoretically ideal way to approach inductive inference, it cannot be applied directly to real-world problems, only approximated. In essence, it serves more as a conceptual guide than a practical toolkit.
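Since the ideal quantity cannot be computed, practical work falls back on computable stand-ins. One rough proxy, used here as an assumption for illustration rather than anything the theory prescribes, is the size of the data after running it through an off-the-shelf compressor: a short compressed form is evidence that a short description exists. The helper name `compressed_size` is made up for this sketch.

```python
import random
import zlib

def compressed_size(data: bytes) -> int:
    """Bytes needed by zlib at maximum compression: a crude, computable proxy."""
    return len(zlib.compress(data, 9))

regular = b"10" * 500                  # 1000 bytes with an obvious pattern
rng = random.Random(0)                 # fixed seed so the run is repeatable
noisy = bytes(rng.getrandbits(8) for _ in range(1000))  # 1000 pseudo-random bytes

print(compressed_size(regular), compressed_size(noisy))
```

The patterned string shrinks to a tiny fraction of its size while the pseudo-random bytes do not shrink at all. The example also shows the limits of the proxy: those “noisy” bytes come from a few lines of code and a seed, so a very short description of them does exist, but zlib has no way of finding it.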

Moreover, the theory doesn’t directly address the ethical or societal implications of AI, an increasingly important aspect of modern machine learning. Solomonoff gives us the how, but he doesn’t necessarily delve into the should.

Despite these challenges, the arguments around Solomonoff’s Theory are precisely what make it so compelling. It remains a hot topic, sparking impassioned debates that push the boundaries of what we understand about intelligence, both artificial and natural.

Conclusion

So here we are, friends, at the end of our deep dive into Solomonoff’s Theory of Inductive Inference and its expansive impact on AI, machine learning, and beyond. It’s been a wild ride, hasn’t it? We’ve journeyed from the mathematical subtleties of Solomonoff’s formalism to its philosophical underpinnings, its synergies with Bayesian thinking, and even its real-world applications and criticisms.

Let’s not lose sight of the big picture, though. Solomonoff’s Theory is more than just equations and algorithms; it’s a framework that challenges us to think deeply about the way we approach problems and make predictions. While it has its limitations, the theory continues to be a touchstone that propels forward-thinking in AI. Just as the man himself was a visionary, his work challenges us to be visionaries in our own right.

In a world that’s increasingly data-driven, staying informed about the theoretical foundations of machine learning isn’t just optional—it’s essential. We should all be excited that we’re here, right now, to witness and partake in this incredible journey of scientific discovery and technological innovation.

Bibliography

Ready to plunge deeper into this intellectual rabbit hole? Here are some must-reads that resonate with the theories we’ve dissected today:

- “The Master Algorithm” by Pedro Domingos – A compelling overview of machine learning theories, including the role of Solomonoff’s and Bayesian thinking.

- “Artificial Intelligence: Foundations of Computational Agents” by David L. Poole and Alan K. Mackworth – This book provides a comprehensive look at AI and delves into inductive inference, among other things.

- “Bayesian Reasoning and Machine Learning” by David Barber – A textbook that focuses on Bayesian methods and their role in modern machine learning.

- “The Elements of Statistical Learning” by Trevor Hastie, Robert Tibshirani, and Jerome Friedman – A cornerstone text for anyone in machine learning, it discusses various algorithms and principles, including Occam’s Razor.

- “I Am a Strange Loop” by Douglas Hofstadter – While not directly about Solomonoff, this book by the Pulitzer-winning author of “Gödel, Escher, Bach” tackles the complexities of systems and intelligence, offering perspectives that complement Solomonoff’s theories.

That’s it for now, dear reader. But remember, the beauty of science is that it’s ever-evolving. Stay curious, stay hungry, and keep turning those gears in that brilliant mind of yours!