In this article, we’re going to explore the basics of Quantum Computing and understand its limitations. Let’s also take a closer look at Qubits, the foundation of Quantum Computers. Step into a new era of network technology revolution.

In this article

- Introduction

- Understanding Quantum Computing Basics and its Limitations

- A Closer Look at Qubits: The Foundation of Quantum Computing

- Practical Implications of Quantum Computing

- Conclusion

- Bibliography

Introduction

The realm of Quantum Computing can seem like an intimidating jungle of complex concepts, particularly if you’re just beginning your journey. This article, focusing on Quantum Computing Basics, is your map through this intriguing world. We’ll guide you through the network of quantum theories, making them digestible and engaging, thereby bringing you closer to this awe-inspiring technology.

As we delve into the evolving landscape of network technologies, Quantum Computing emerges as a game-changer, promising significant advancements and innovations. However, it’s crucial to consider its limitations as we evaluate its future role. This technology might revolutionize our network infrastructures, but the journey to get there is filled with challenges that we will also discuss in this guide.

Understanding Quantum Computing Basics and its Limitations

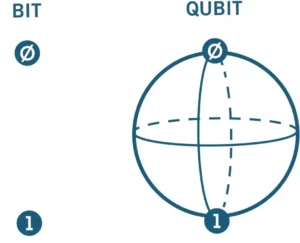

Quantum Computing is a computational approach that uses principles from quantum mechanics, the fundamental theory in physics that describes nature at the scale of atoms and subatomic particles. Where traditional computers operate on ‘bits’ of information, which are either 0s or 1s, Quantum Computers work with ‘qubits’. Uniquely, qubits can exist in a state of superposition: a weighted combination of 0 and 1 that only resolves to a single value when the qubit is measured. Because a register of n qubits is described by 2^n such weights at once, quantum computers have the potential to solve certain problems far more efficiently than classical machines.

While the superposition and entanglement of qubits allow quantum computers to tackle certain complex calculations far faster than classical machines, they also present limitations. Quantum systems are incredibly sensitive to external interference, a problem known as quantum decoherence, which can cause computational errors. Moreover, quantum hardware is still at an early stage of development, and creating a practical, large-scale quantum computer remains a daunting challenge.

Take a look at our previous article about this topic: Starting to understand Quantum Computing!

A Closer Look at Qubits: The Foundation of Quantum Computing

At the heart of quantum computing lies a unique and mystifying entity: the qubit, or quantum bit. Though profoundly more complex than its classical counterpart, the bit, the qubit serves as the bedrock upon which quantum computers are built. The remarkable properties of qubits provide the foundation for the transformative potential of quantum computing but also contribute to some of its most pressing limitations.

In classical computing, bits represent the smallest unit of data, restricted to a binary state: 0 or 1. The crux of a quantum bit’s novelty and power resides in its capacity to transcend this binary restriction, thanks to a quantum mechanical property known as superposition. Superposition allows qubits to exist in a state that is a combination of both 0 and 1 simultaneously. This doesn’t simply mean that a qubit is sometimes a 0 and sometimes a 1. Rather, a qubit’s state can be a complex mathematical combination of both 0 and 1 states at the same time, until it’s measured.
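To make the idea of a “mathematical combination” concrete, here is a minimal sketch in plain Python. It assumes the standard textbook description of a qubit as two complex amplitudes; the names `ZERO`, `hadamard`, `probabilities`, and `measure` are illustrative choices for this example and do not come from any quantum library.

```python
import math
import random

# A single-qubit state is a pair of complex amplitudes (alpha, beta)
# for the basis states |0> and |1>, with |alpha|^2 + |beta|^2 = 1.
ZERO = (1 + 0j, 0 + 0j)


def hadamard(state):
    """Apply the Hadamard gate, which turns |0> into an equal superposition."""
    a, b = state
    s = 1 / math.sqrt(2)
    return (s * (a + b), s * (a - b))


def probabilities(state):
    """Born rule: each outcome's probability is its squared amplitude magnitude."""
    a, b = state
    return (abs(a) ** 2, abs(b) ** 2)


def measure(state, rng):
    """Simulate a measurement: return 0 or 1 according to the probabilities."""
    p0, _ = probabilities(state)
    return 0 if rng.random() < p0 else 1


plus = hadamard(ZERO)                 # equal superposition of 0 and 1
p0, p1 = probabilities(plus)
print(f"{p0:.2f} {p1:.2f}")           # prints 0.50 0.50

rng = random.Random(42)               # seeded only to make the run repeatable
outcomes = [measure(plus, rng) for _ in range(10)]
print(outcomes)                       # each entry is a plain 0 or 1
```

Before measurement the state carries both amplitudes; each individual measurement still returns an ordinary 0 or 1.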

While the concept of superposition is the first leap in understanding the quantum computing world, it’s important not to overlook a qubit’s second quantum trait: entanglement. Entanglement is a peculiar quantum phenomenon wherein pairs or groups of particles interact in ways such that the state of one particle cannot be described independently of the state of the other particles. This means that once two qubits are entangled, their measurement outcomes are correlated no matter how far apart they are, although these correlations cannot be used to send information faster than light. This property is a powerful asset to quantum computing: combined with superposition, it lets quantum algorithms work with joint states of many qubits that classical computers cannot represent efficiently, which is the source of the speedups expected for certain problems.
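Entanglement can be illustrated with a small sketch in plain Python. It assumes the usual textbook construction of a two-qubit “Bell state” (a Hadamard gate on the first qubit followed by a CNOT gate); the function names and the choice of 1000 simulated measurements are assumptions made for this example.

```python
import math
import random

# A two-qubit state is a list of four amplitudes for |00>, |01>, |10>, |11>.


def apply_h_on_first(state):
    """Apply a Hadamard gate to the first qubit only."""
    s = 1 / math.sqrt(2)
    a00, a01, a10, a11 = state
    return [s * (a00 + a10), s * (a01 + a11), s * (a00 - a10), s * (a01 - a11)]


def apply_cnot(state):
    """Flip the second qubit whenever the first qubit is 1."""
    a00, a01, a10, a11 = state
    return [a00, a01, a11, a10]


def sample(state, rng):
    """Simulate measuring both qubits; returns the pair (first, second)."""
    probs = [abs(a) ** 2 for a in state]
    idx = rng.choices(range(4), weights=probs)[0]
    return idx >> 1, idx & 1


# Start in |00> and build the entangled state (|00> + |11>) / sqrt(2).
bell = apply_cnot(apply_h_on_first([1, 0, 0, 0]))

rng = random.Random(0)  # seeded only to make the run repeatable
shots = [sample(bell, rng) for _ in range(1000)]

always_equal = all(first == second for first, second in shots)
ones_on_first = sum(first for first, _ in shots)
print(always_equal)     # the two qubits always agree
print(ones_on_first)    # close to 500: each qubit alone looks like a coin flip
```

The two results always match, yet each qubit taken alone is random. That is why the correlation cannot carry a message by itself.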

Quantum decoherence

These unique characteristics provide quantum computers with their extraordinary potential, but they also pose significant challenges. One of these challenges is quantum decoherence. Quantum systems are extremely sensitive to their environment, and any interaction with the external world can disturb their superposition, causing the qubits to lose their quantum behavior and ‘decohere’ until they behave like ordinary bits. This is a critical limitation, as it can lead to computational errors, and it is why many quantum computers, such as those built on superconducting qubits, must be kept at extremely low temperatures and shielded from outside disturbances.
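As a rough illustration of how decoherence erodes a superposition, the sketch below uses a deliberately simple model that is an assumption of this example rather than a description of any particular machine: pure dephasing, in which the “quantum” part of the state fades exponentially with a characteristic time `t2`. The function name and the time values are hypothetical.

```python
import math


def p_zero_after_wait(t, t2):
    """Probability of reading 0 after: Hadamard, wait for time t, Hadamard.

    Without noise the two Hadamard gates cancel and the result is always 0.
    Under pure dephasing the coherence term shrinks as exp(-t / t2), so the
    result drifts toward a 50/50 coin flip.
    """
    coherence = 0.5 * math.exp(-t / t2)
    return 0.5 + coherence


T2 = 100.0  # hypothetical coherence time, in arbitrary time units

for wait in (0.0, 50.0, 100.0, 500.0):
    print(f"wait={wait:6.1f}  P(0)={p_zero_after_wait(wait, T2):.3f}")
```

With no waiting the answer is certain. After many multiples of `t2` the qubit is no better than a random classical bit, which is why computations have to finish (or be error-corrected) before coherence is lost.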

Preserving the quantum state

Moreover, controlling qubits and performing computations while preserving their quantum states is an extraordinary technological challenge. Qubits require precise tuning and manipulation to perform complex calculations, and the difficulty of this task grows quickly with the number of qubits. Each additional qubit doubles the number of amplitudes that describe the computer’s state, increasing its computational power, but it also makes the system more prone to errors and harder to control.
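One way to get a feel for how quickly things scale is to count what a classical computer would need just to store the state of n qubits. The sketch below assumes a full state-vector simulation with one 16-byte complex number per amplitude; the function name and the qubit counts are illustrative choices.

```python
def state_vector_bytes(n_qubits, bytes_per_amplitude=16):
    """Memory needed to store all 2**n amplitudes of an n-qubit state."""
    return (2 ** n_qubits) * bytes_per_amplitude


GIB = 2 ** 30  # bytes in one gibibyte

for n in (10, 20, 30, 40, 50):
    print(f"{n} qubits -> {state_vector_bytes(n) / GIB:,.6f} GiB")
```

Under these assumptions 30 qubits already need 16 GiB, and every extra qubit doubles that figure.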

To harness the full power of quantum computing, scientists and engineers need to overcome these hurdles. Innovations in qubit design, error correction techniques, and advancements in quantum algorithms all play crucial roles in the ongoing evolution of quantum computing technology.

In essence, qubits represent the confluence of promise and challenge in quantum computing. Their unique properties are what make quantum computers so potentially powerful, but they also contribute to the greatest obstacles in developing practical, large-scale quantum computers. As we continue to explore the quantum computing basics, it’s essential to remember that the quest for quantum supremacy is as much about conquering these challenges as it is about leveraging the power of qubits.

Practical Implications of Quantum Computing

While the theoretical concepts underlying quantum computing can be quite abstract, the practical implications of this technology are far-reaching. Quantum computers have the potential to revolutionize many fields, from cryptography, where quantum algorithms could render many current systems obsolete, to complex problem-solving in logistics, finance, and drug discovery. However, it’s important to bear in mind that we are in the early days of this technology, and practical, large-scale quantum computing is still a vision for the future.


Conclusion

This exploration of Quantum Computing Basics has taken us on a journey from the fundamental concepts that define this field, to the fascinating and complex world of qubits, and finally to the practical implications and limitations of this emerging technology. As we stand on the brink of what many are calling the second quantum revolution, it’s clear that the world of quantum computing is not just reserved for physicists or computer scientists. It’s a burgeoning field that promises to reshape our world in ways we’re just beginning to imagine.

As we continue to navigate the vast and complex landscape of network technologies, quantum computing represents a new frontier full of potential and challenges. While the full manifestation of this technology’s promise may still be years or even decades away, understanding the basics of quantum computing is a crucial step toward preparing for, and shaping, the future of network technology. Thank you for embarking on this journey into the quantum realm with us.

Bibliography (Further Reading)

Here are several books that break down the complex concepts of quantum computing into digestible information, making them accessible to readers with little to no background in the subject:

- “Quantum Computing for Everyone” by Chris Bernhardt: This book offers a gentle introduction to the world of quantum computing, breaking down complex principles into engaging and digestible lessons.

- “Quantum Computing: An Applied Approach” by Jack D. Hidary: Hidary’s work is lauded for its practical approach and is considered a great starting point for those interested in how quantum computing can be applied to real-world problems.

- “Programming Quantum Computers: Essential Algorithms and Code Samples” by Eric R. Johnston, Nic Harrigan, and Mercedes Gimeno-Segovia: This is an excellent choice for those with some background in computer science or programming who are interested in exploring the intricacies of quantum algorithms and coding.

- “Quantum Computing for Computer Scientists” by Noson S. Yanofsky and Mirco A. Mannucci: While a bit more technical, this book provides an introduction to quantum computing in the context of classical computer science.

- “Quantum Computation and Quantum Information” by Michael A. Nielsen and Isaac L. Chuang: Often referred to as the ‘bible’ of quantum computing, this book is more advanced but provides a thorough exploration of both quantum computing and quantum information theory.

- “In Search of Schrödinger’s Cat: Quantum Physics and Reality” by John Gribbin: While not exclusively about quantum computing, this book is an engaging and approachable introduction to quantum mechanics, the scientific foundation of quantum computing.

Remember, the best book for you will depend on your prior knowledge and learning style. So, it might be helpful to read reviews or preview chapters to see which one suits you best.