In the realm of computing and digital communication, the terms “bits” and “bytes” are frequently used to quantify data. Understanding the relationship between these two units is fundamental to comprehending data measurement. In this article, we will explore the concept of bits and bytes, shedding light on their definitions and the relationship between them. By the end, you’ll have a clear understanding of how these units are used to measure data.

Defining Bits and Bytes

Bits Definition

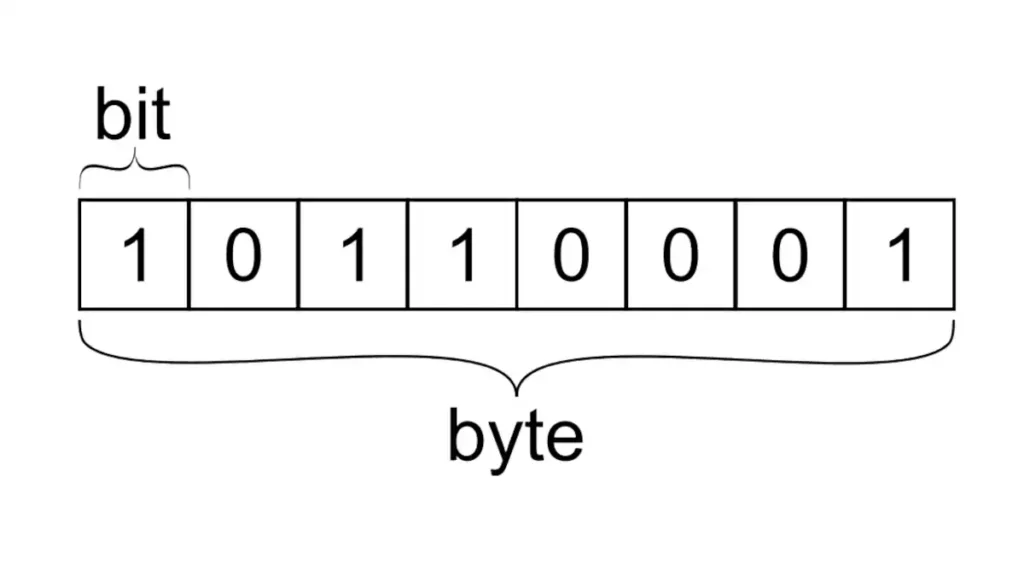

A bit, short for “binary digit,” is the most fundamental unit of digital information. It represents the smallest building block of data, capable of storing a single value, either a 0 or a 1.

Bits are the foundation of all digital communication and computation, serving as the basic currency for representing and manipulating information in computers and networks.

Bytes Definition

A byte is a larger unit of data storage and communication, consisting of a group of 8 bits. One byte can hold any integer from 0 to 255 or, in an encoding such as ASCII, a single character.

Bytes are a more practical measure for expressing data quantities: most computers address memory in units of one byte, and character encodings are defined in terms of bytes.
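Because each of a byte's 8 bits can independently be 0 or 1, a byte has 2^8 = 256 possible bit patterns, which is why it can represent the integers 0 through 255. The minimal Python sketch below enumerates those patterns; `byte_patterns` is a hypothetical helper written only for illustration.

```python
def byte_patterns() -> list[str]:
    """Return every 8-bit pattern a single byte can hold, as binary strings."""
    # format(n, "08b") writes n in binary, zero-padded to exactly 8 digits.
    return [format(n, "08b") for n in range(2 ** 8)]

patterns = byte_patterns()
print(len(patterns))  # 256
print(patterns[0])    # 00000000  (decimal 0)
print(patterns[-1])   # 11111111  (decimal 255)
```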

The Relationship between Bits and Bytes

Conversion

Bits to Bytes: To convert bits to bytes, divide the number of bits by 8. For example, 24 bits equate to 3 bytes (24 bits ÷ 8 = 3 bytes).

Bytes to Bits: To convert bytes to bits, multiply the number of bytes by 8. For instance, 5 bytes correspond to 40 bits (5 bytes × 8 = 40 bits).
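Both conversion rules can be written as a pair of one-line functions. The Python sketch below is a minimal illustration; the names `bits_to_bytes`, `bytes_to_bits`, and `BITS_PER_BYTE` are hypothetical, chosen only for this example.

```python
BITS_PER_BYTE = 8  # one byte = 8 bits

def bits_to_bytes(bits: float) -> float:
    """Convert a number of bits to bytes by dividing by 8."""
    return bits / BITS_PER_BYTE

def bytes_to_bits(num_bytes: float) -> float:
    """Convert a number of bytes to bits by multiplying by 8."""
    return num_bytes * BITS_PER_BYTE

print(bits_to_bytes(24))  # 3.0
print(bytes_to_bits(5))   # 40
```

The parameter is named `num_bytes` rather than `bytes` so it does not shadow Python's built-in `bytes` type.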

Data Representation:

Bits: Bits enable the representation of discrete binary information, allowing for precise encoding and manipulation of data.

Bytes: Bytes are the practical unit for text, because character encoding schemes are defined in terms of them: ASCII stores each character in a single byte, while Unicode encodings such as UTF-8 use one to four bytes per character.
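To see bits and bytes behind actual text, the minimal Python sketch below encodes two characters as UTF-8 and prints the 8-bit pattern of each resulting byte. The helper `utf8_bits` is hypothetical; `str.encode` and `format` are standard Python built-ins.

```python
def utf8_bits(char: str) -> list[str]:
    """Return the 8-bit pattern of each byte used to store `char` in UTF-8."""
    encoded = char.encode("utf-8")  # a bytes object: one integer (0-255) per byte
    return [format(b, "08b") for b in encoded]

for ch in ["A", "é"]:
    bit_groups = utf8_bits(ch)
    print(ch, len(bit_groups), "byte(s):", " ".join(bit_groups))
# A 1 byte(s): 01000001
# é 2 byte(s): 11000011 10101001
```

The ASCII letter "A" fits in one byte, while the accented "é" needs two bytes in UTF-8.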

Application in Data Measurement

Data Storage:

Bits: Bits are commonly used to measure small data quantities or to express the speed of data transmission and processing.

Bytes: Bytes are primarily employed to quantify larger data sets, such as file sizes or memory capacities. They are more intuitive for users and align with common storage devices like hard drives and flash memory.

Network Speed and Bandwidth:

Bits: Network speed and bandwidth are typically measured in bits per second (bps) or their derivatives (Kbps, Mbps, Gbps). These units reflect the rate at which data is transmitted or received over a network.

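Bytes: Transfer speed is also often expressed in bytes per second (Bps, or multiples such as MBps), for example by web browsers and download managers, to show how much file data is actually being moved. Since a byte is 8 bits, a rate in Bps is one-eighth of the same rate in bps.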
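The practical consequence is that an advertised speed in megabits per second must be divided by 8 to get megabytes per second. The Python sketch below is a minimal illustration under two stated assumptions: decimal prefixes on both sides (1 Mb = 1,000,000 bits, 1 MB = 1,000,000 bytes), and an idealized link with no protocol overhead or congestion. The function names are hypothetical.

```python
BITS_PER_BYTE = 8

def mbps_to_megabytes_per_second(mbps: float) -> float:
    """Convert a link speed in megabits per second to megabytes per second."""
    # Assumes decimal prefixes for both units, so only the factor of 8 applies.
    return mbps / BITS_PER_BYTE

def download_seconds(file_megabytes: float, mbps: float) -> float:
    """Idealized transfer time; ignores protocol overhead and congestion."""
    return file_megabytes / mbps_to_megabytes_per_second(mbps)

print(mbps_to_megabytes_per_second(100))  # 12.5
print(download_seconds(500, 100))         # 40.0
```

Real transfers are usually somewhat slower than this estimate because of protocol overhead.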

Origin of Bit and Byte

The term “bit” is a portmanteau of “binary digit.” The word was suggested by the statistician John W. Tukey, and its first published use was in Claude Shannon’s 1948 paper “A Mathematical Theory of Communication,” which credited the word to Tukey and laid the groundwork for modern digital communications and information theory.

The term “byte,” meanwhile, was coined by Werner Buchholz in 1956, during the design of the IBM 7030 Stretch computer, when the designers needed a name for a group of bits handled as a unit. In Stretch, a byte could be anywhere from one to eight bits long, so there was originally no standard byte size; the term became synonymous with 8 bits after IBM adopted the 8-bit byte in the System/360, announced in 1964.

Summary

In summary, bits and bytes are fundamental units of data measurement in computing and digital communication. While a bit represents the smallest unit of information, a byte encompasses a group of 8 bits, providing a more practical measure for data representation and quantification. Understanding the relationship between bits and bytes allows for accurate data measurement, storage capacity estimation, and network speed evaluation.

Mastering the distinction between bits and bytes is crucial for navigating the complexities of modern technology. Whether you’re assessing network performance, estimating storage requirements, or simply exploring the world of digital information, a solid understanding of bits and bytes empowers you to comprehend and engage with the vast landscape of data in our digital age.

How Many Bytes in a Bit?

Is there an answer to the question “How many bytes are in a bit?” Yes, there is!

The relationship between bits and bytes is defined by a conversion factor.

A bit is the smallest unit of digital information and represents a binary value, either 0 or 1. On the other hand, a byte is a higher-level unit of data storage and communication, consisting of a group of 8 bits.

Therefore, the logical answer to the question is that there are 1/8 (0.125) bytes in a single bit. In other words, it takes 8 bits to form 1 byte.
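Python's standard `fractions` module can confirm this exactly, without floating-point rounding. The sketch below also includes a hypothetical helper, `whole_bytes_needed`, to illustrate a practical assumption: memory and files are generally allocated in whole bytes, so a value of only a few bits, stored on its own, still occupies at least one full byte.

```python
from fractions import Fraction

BITS_PER_BYTE = 8

def bits_as_bytes(bits: int) -> Fraction:
    """Exact number of bytes in `bits` bits (may be a fraction of a byte)."""
    return Fraction(bits, BITS_PER_BYTE)

def whole_bytes_needed(bits: int) -> int:
    """Smallest whole number of bytes that can hold `bits` bits (ceiling division)."""
    return -(-bits // BITS_PER_BYTE)

print(bits_as_bytes(1), float(bits_as_bytes(1)))     # 1/8 0.125
print(whole_bytes_needed(1), whole_bytes_needed(9))  # 1 2
```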