HTTP/2 multiplexing addresses a core limitation of its predecessor, HTTP/1.1. By allowing multiple requests and responses to be in flight at the same time over a single TCP connection, it reduces latency and makes better use of network resources. This article covers the mechanics of HTTP/2 multiplexing, its benefits, and its impact on the modern web.

Index

- What is HTTP/2 Multiplexing?

- The Evolution from HTTP/1.1 to HTTP/2

- How HTTP/2 Multiplexing Works

- Implementing HTTP/2 Multiplexing

- References

1. What is HTTP/2 Multiplexing?

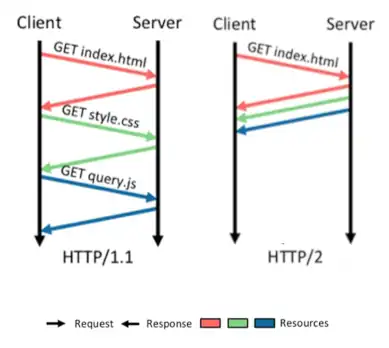

HTTP/2 multiplexing is a core feature of the HTTP/2 protocol, designed to optimize the way web communication occurs over a single TCP connection. HTTP/1.1 can reuse a connection for successive requests, but in practice each connection handles only one request/response exchange at a time. To fetch resources in parallel, browsers therefore open several TCP connections per host, which increases latency and resource consumption. Within each connection the problem of head-of-line blocking remains: subsequent requests are delayed until the first request in line has completed.

HTTP/2 multiplexing addresses these challenges by allowing multiple requests and responses to be interleaved and transmitted concurrently over a single TCP connection. This is achieved through a system of streams, frames, and prioritization, which lets the browser and server exchange information in a more efficient and organized manner. Note that this removes head-of-line blocking at the HTTP layer only. Because all streams share one TCP connection, a lost TCP packet still stalls every stream until it is retransmitted.

2. The Evolution from HTTP/1.1 to HTTP/2

The evolution from HTTP/1.1 to HTTP/2 represents a significant leap forward in web protocol design, directly addressing the limitations of the earlier version. HTTP/1.1, introduced in 1997, laid the groundwork for the modern web but struggled with performance issues such as head-of-line blocking and the inefficiency of opening multiple TCP connections for parallel resource delivery. These limitations hampered user experience, especially as web applications became more complex and resource-intensive.

HTTP/2, formalized in 2015, introduced several key innovations to address these issues. Multiplexing allows multiple requests and responses to share a single connection. Header compression (HPACK) reduces the overhead of repeated headers, and server push lets a server send resources before the client asks for them. Together, these features made web communication more efficient and paved the way for more dynamic and interactive web applications.

3. How HTTP/2 Multiplexing Works

This section explains how HTTP/2 multiplexing operates, breaking it down into its components and walking through an example.

3.1 Understanding Streams, Messages, and Frames

At the heart of HTTP/2’s efficiency is its structure, which organizes data transmission into streams, messages, and frames:

- Streams: A stream is an independent, bi-directional sequence of frames exchanged between the client and server within a single TCP connection. Each stream has a unique identifier and carries a sequence of messages, allowing multiple streams to coexist simultaneously without interfering with each other.

- Messages: A message consists of a complete sequence of frames that map to a logical HTTP message (either a request or a response). Messages can be composed of one or more frames and are associated with a specific stream.

- Frames: The frame is the smallest unit of communication in HTTP/2, carrying specific types of data, such as headers, payload, and control information. Every frame begins with a fixed 9-octet header that includes the identifier of the stream it belongs to. Frames from different streams are multiplexed across a single TCP connection, allowing for concurrent transmission of multiple requests and responses.
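To make the frame concept concrete, here is a minimal sketch of the 9-octet frame header layout described in the HTTP/2 specification: a 24-bit payload length, an 8-bit type, an 8-bit flags field, and one reserved bit followed by a 31-bit stream identifier. The names `FrameHeader`, `pack_frame_header`, and `parse_frame_header` are illustrative choices made for this example, not part of any library.

```python
import struct
from dataclasses import dataclass

# A few frame type codes from the HTTP/2 specification (not the full list).
FRAME_TYPES = {0x0: "DATA", 0x1: "HEADERS", 0x4: "SETTINGS", 0x8: "WINDOW_UPDATE"}

# Flag bits used by HEADERS frames (END_STREAM also applies to DATA frames).
END_STREAM = 0x1
END_HEADERS = 0x4


@dataclass
class FrameHeader:
    length: int      # payload length in octets (24 bits)
    frame_type: int  # frame type code (8 bits)
    flags: int       # type-specific flags (8 bits)
    stream_id: int   # stream identifier (31 bits)


def pack_frame_header(header: FrameHeader) -> bytes:
    """Serialize a frame header into its 9-octet wire form."""
    if not 0 <= header.length < 2**24:
        raise ValueError("length must fit in 24 bits")
    if not 0 <= header.stream_id < 2**31:
        raise ValueError("stream_id must fit in 31 bits")
    return header.length.to_bytes(3, "big") + struct.pack(
        ">BBI", header.frame_type, header.flags, header.stream_id
    )


def parse_frame_header(data: bytes) -> FrameHeader:
    """Parse the first 9 octets of a frame into a FrameHeader."""
    if len(data) < 9:
        raise ValueError("a frame header is 9 octets long")
    length = int.from_bytes(data[:3], "big")
    frame_type, flags, raw_id = struct.unpack(">BBI", data[3:9])
    # The most significant bit is reserved and must be ignored on receipt.
    return FrameHeader(length, frame_type, flags, raw_id & 0x7FFFFFFF)
```

For instance, a HEADERS frame on stream 1 with a 16-octet payload and both `END_STREAM` and `END_HEADERS` set would be packed from `FrameHeader(16, 0x1, END_STREAM | END_HEADERS, 1)`.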

3.2 The Multiplexing Process: An Example

To illustrate how HTTP/2 multiplexing works, consider a scenario where a client requests multiple resources from a server (e.g., an HTML document, a CSS stylesheet, and a JavaScript file) to render a webpage:

- Stream Initialization: The client opens a single TCP connection to the server and initiates three separate streams, one for each resource request. Each stream is assigned a unique identifier; streams initiated by the client use odd numbers (for example 1, 3, and 5).

- Frame Construction: For each request, the client constructs one or more frames containing the request headers and, if necessary, additional frames for any request body data. These frames are tagged with their respective stream identifiers.

- Concurrent Frame Transmission: Instead of waiting for one request to complete before starting the next, the client sends frames from all three streams over the single TCP connection. The frames travel one after another on the wire but are interleaved, so pieces of each request and response are transmitted as soon as they are available.

- Server Processing: The server receives the interleaved frames and, based on their stream identifiers, reassembles them into the original messages (requests). It processes each request independently, generating response messages that are also broken down into frames.

- Response Delivery: The server sends the response frames back to the client over the same TCP connection, interleaving them to maximize efficiency. The client uses the stream identifiers to reconstruct each response from its frames.

- Stream Completion: Once both the request and the response on a stream have been fully transmitted and received, that stream is closed. The single TCP connection remains open for additional requests or until explicitly closed by either the client or server.
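The steps above can be sketched as a small simulation. This is a toy model rather than a real HTTP/2 implementation: frames are plain tuples, scheduling is a simple round-robin, and the helper names (`split_into_frames`, `interleave`, `reassemble`) are invented for this example. The tiny frame size is also an assumption chosen to keep the output readable; real frames are far larger.

```python
from collections import defaultdict
from itertools import zip_longest


def split_into_frames(stream_id, payload, max_frame_size=4):
    """Cut a payload into (stream_id, chunk, end_stream) frames."""
    chunks = [
        payload[i:i + max_frame_size]
        for i in range(0, len(payload), max_frame_size)
    ] or [b""]
    last = len(chunks) - 1
    return [(stream_id, chunk, i == last) for i, chunk in enumerate(chunks)]


def interleave(*frame_lists):
    """Place frames on the 'wire' round-robin, one frame per stream per turn."""
    wire = []
    for turn in zip_longest(*frame_lists):
        wire.extend(frame for frame in turn if frame is not None)
    return wire


def reassemble(wire):
    """Rebuild each message from interleaved frames using the stream ID."""
    buffers = defaultdict(bytearray)
    completed = {}
    for stream_id, chunk, end_stream in wire:
        buffers[stream_id] += chunk
        if end_stream:  # last frame of the message: the stream is done
            completed[stream_id] = bytes(buffers.pop(stream_id))
    return completed


if __name__ == "__main__":
    # Three hypothetical responses on client-initiated (odd) stream IDs.
    responses = {1: b"<html></html>", 3: b"body{margin:0}", 5: b"console.log(1)"}
    wire = interleave(*(split_into_frames(sid, body) for sid, body in responses.items()))
    assert reassemble(wire) == responses
```

The receiver never needs frames from one stream to arrive contiguously. The stream identifier on each frame is enough to route the chunk to the right buffer, which is what lets a slow response share the connection without blocking faster ones.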

3.3 Benefits of This Approach

This multiplexed approach provides several benefits over HTTP/1.1:

- Reduced Latency: Multiple requests can be made without waiting for responses to previous ones, significantly reducing webpage loading times.

- Efficient Connection Use: A single TCP connection reduces the overhead and latency associated with opening multiple connections.

- Prioritization: HTTP/2 lets clients signal which streams matter most, so that servers can deliver critical resources first and further improve page load times. These signals are advisory, and how well they are honored depends on the server.

- Flow Control: HTTP/2 includes flow control at both the stream level and the connection level, allowing receivers to control the pace of data transmission so that they are not overwhelmed.

- Enhanced Security: The HTTP/2 specification does not itself require encryption, but major browsers support HTTP/2 only over TLS, so in practice adopting it promotes a more secure web environment.
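The flow control point above can be illustrated with a minimal sketch of the sender's bookkeeping. It assumes the default initial window of 65,535 octets from the HTTP/2 specification and models only the arithmetic: a sender may transmit DATA up to the smaller of the stream window and the connection window, and each window grows again when the receiver sends a WINDOW_UPDATE. The names `SendWindow` and `send_data` are hypothetical.

```python
DEFAULT_INITIAL_WINDOW = 65_535  # default initial window size in octets


class SendWindow:
    """Tracks how many octets of DATA the peer is currently willing to accept."""

    def __init__(self, size: int = DEFAULT_INITIAL_WINDOW):
        self.available = size

    def window_update(self, increment: int) -> None:
        """Apply a WINDOW_UPDATE received from the peer."""
        if increment <= 0:
            raise ValueError("WINDOW_UPDATE increment must be positive")
        self.available += increment


def send_data(conn: SendWindow, stream: SendWindow, wanted: int) -> int:
    """Return how many octets may be sent now, deducting them from both windows."""
    allowed = max(0, min(wanted, conn.available, stream.available))
    conn.available -= allowed
    stream.available -= allowed
    return allowed


if __name__ == "__main__":
    conn, stream = SendWindow(), SendWindow()
    sent = send_data(conn, stream, 100_000)   # capped by the initial window
    blocked = send_data(conn, stream, 10)     # nothing left until an update arrives
    print(sent, blocked)
```

Because the connection window is shared by all streams, a receiver can slow down one stream through its own window without stopping the others, and can still cap the total amount of buffered data through the connection window.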

4. Implementing HTTP/2 Multiplexing

Adopting HTTP/2 and its multiplexing feature primarily requires server and client (browser) support. Most modern web servers and browsers already support HTTP/2, making the shift seamless for users and developers. For webmasters, enabling HTTP/2 on a web server involves configuration changes that vary by server software, but generally, the process is straightforward and well-documented.
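One way to check whether a server has HTTP/2 enabled is to see which protocol it selects during the TLS handshake via ALPN, where HTTP/2 over TLS is identified by the string `h2`. The sketch below uses only Python's standard `ssl` and `socket` modules. The function names are made up for this example, and the host passed in is whatever server you want to test.

```python
import socket
import ssl


def make_alpn_context() -> ssl.SSLContext:
    """Build a TLS context that offers HTTP/2 first, then HTTP/1.1."""
    context = ssl.create_default_context()
    context.set_alpn_protocols(["h2", "http/1.1"])
    return context


def negotiated_protocol(host: str, port: int = 443, timeout: float = 5.0):
    """Return the ALPN protocol the server selected, or None if it chose none."""
    context = make_alpn_context()
    with socket.create_connection((host, port), timeout=timeout) as sock:
        with context.wrap_socket(sock, server_hostname=host) as tls:
            return tls.selected_alpn_protocol()
```

Calling `negotiated_protocol("example.com")` (a placeholder hostname) returns `"h2"` when the server agrees to speak HTTP/2 over that connection. Any other value suggests that HTTP/2 is not enabled on that endpoint or that something in between, such as a proxy, does not support it.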

HTTP/2 multiplexing has fundamentally changed how web content is transmitted, making the web faster, more efficient, and more reliable. By understanding and implementing this protocol feature, developers and administrators can significantly enhance the performance and user experience of web applications.

5. References

- RFC 7540 – Hypertext Transfer Protocol Version 2 (HTTP/2): The original specification of HTTP/2, including multiplexing. It has since been obsoleted by RFC 9113, which is the current HTTP/2 specification.