In today’s digital age, a silent symphony of bytes and bits plays continuously in the background, generating an enormous wave of data with each passing second. From the mundane choices we make online to complex scientific experiments, this accumulated data is a vast resource waiting to be tapped. Data Science stands at the confluence of this digital deluge, converting raw data into actionable insights and better decisions. For budding computer engineers, understanding Data Science is not merely a modern necessity; it’s an invitation to shape the future, decode mysteries, and drive innovation. Let’s start with Data Science basics!

In this article:

- What is Data Science?

- The Pillars of Data Science

- Exploring Leading Data Science Software and Languages

- The Importance of Robust Data Infrastructure

- Algorithms at the Heart of Predictive Analysis

- How Data Science is Revolutionizing Industries

- Predicting Trends and Technological Advancements

- Carving a Niche in the Data World

- Recommended Books, Courses, and Communities

- Conclusion

Data Science, the art and science of extracting meaning from vast datasets, amalgamates diverse disciplines, tools, and techniques. Its significance in today’s world cannot be overstated, especially as organizations, big and small, race to harness the potential of their data. Embarking on a journey through the depths of Data Science offers not only a promising career but also a chance to be at the forefront of technological revolutions.

What is Data Science?

Defining Data Science in Modern Context

Data Science, at its core, is the interdisciplinary field dedicated to extracting insights from structured and unstructured data. It combines skills from statistics, computer science, machine learning, and domain-specific knowledge to make informed decisions, predict future trends, and unearth hidden patterns within data. In our contemporary digital age, where every click, purchase, and interaction contributes to a massive reservoir of information, Data Science acts as the alchemical process, turning this digital lead into actionable gold. Traditional data analytics often provides a reactive understanding of past events; Data Science looks ahead, aiming to predict and influence future outcomes based on past and current data.

The Historical Evolution: From Statistics to Data Science Basics

While the term “Data Science” might seem like a modern phenomenon, its roots stretch back centuries. The initial inklings of data analysis began with the development of statistics, a branch that flourished in the 18th century for predicting populations and understanding societal trends. Fast forward to the mid-20th century, when the advent of computers revolutionized data storage and computation.

With these technological marvels, our capacity to collect and process data grew rapidly, outpacing traditional statistical methods. The term “Data Science” appeared in print as early as the 1970s, but it wasn’t until the data explosion of the 21st century, fueled by the internet, smartphones, and IoT devices, that it became a distinct and pivotal field. Bridging the gap between traditional statistics, advanced computational techniques, and vast data sources, Data Science emerged as the new paradigm, reshaping industries, fueling innovations, and even redefining our societal structures.

The Pillars of Data Science

Statistics: The Backbone

The realm of statistics is vast, serving as the foundational backbone of Data Science. It is the art and science of using data to make decisions, provide insights, and validate hypotheses. When confronted with a sea of data, it’s statistical tools and techniques that empower us to discern signal from noise.

1. Descriptive Statistics

These offer a snapshot of the central tendencies (mean, median, mode) and dispersions (range, variance, standard deviation) within a dataset, giving a preliminary understanding of its distribution and structure.

2. Inferential Statistics

Moving beyond mere descriptions, inferential statistics helps in drawing conclusions and predictions about populations based on samples. Tools like hypothesis testing, confidence intervals, and chi-squared tests are pivotal here.

3. Probability Theory

Serving as the bedrock for inferential statistics, probability theory quantifies the likelihood of events. Concepts like Bayes’ theorem and probability distributions (e.g., normal, binomial, Poisson) are essential in this context.

4. Regression and Correlation

These techniques help in understanding relationships between variables. While correlation measures the strength and direction of a linear relationship, regression can model and predict outcomes based on one or more predictors.
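To make the statistical ideas above concrete, here is a minimal sketch using only Python's standard library. The dataset (hours studied versus exam score) is invented for illustration, and the helper names `pearson_r` and `fit_line` are hypothetical, not part of any library.

```python
import math
import statistics

# Hypothetical sample: hours studied (x) and exam score (y) for six students.
hours = [1, 2, 3, 4, 5, 6]
scores = [52, 55, 61, 64, 70, 73]

# Descriptive statistics: central tendency and dispersion of the scores.
summary = {
    "mean": statistics.mean(scores),
    "median": statistics.median(scores),
    "range": max(scores) - min(scores),
    "stdev": statistics.stdev(scores),  # sample standard deviation
}

def pearson_r(x, y):
    """Pearson correlation: strength and direction of a linear relationship."""
    mx, my = statistics.mean(x), statistics.mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = math.sqrt(sum((a - mx) ** 2 for a in x))
    sy = math.sqrt(sum((b - my) ** 2 for b in y))
    return cov / (sx * sy)

def fit_line(x, y):
    """Ordinary least squares with one predictor: returns (slope, intercept)."""
    mx, my = statistics.mean(x), statistics.mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    slope = sxy / sxx
    return slope, my - slope * mx

r = pearson_r(hours, scores)
slope, intercept = fit_line(hours, scores)
predicted_7h = slope * 7 + intercept  # regression used for prediction

print(summary)
print(f"r={r:.3f}, slope={slope:.3f}, intercept={intercept:.3f}")
```

Correlation only summarizes how tightly the points follow a line, while the fitted line can be used to estimate a score for an unseen number of hours. In practice, libraries such as Pandas or Scikit-learn would handle this at scale.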

Computer Science: The Mechanism

Where statistics provides the tools, computer science provides the machinery to carry out data-intensive tasks at scale. The volume, velocity, and variety of today’s data would be overwhelming without the power of modern computational techniques.

1. Algorithms and Data Structures

The efficiency of data processing and analysis often hinges on the use of the right algorithms (like sorting, searching) and data structures (like arrays, trees, graphs).

2. Databases

With the explosion of data, efficient storage, retrieval, and manipulation of data have become critical. Relational databases, NoSQL databases, and distributed storage solutions like Hadoop underpin this aspect.

3. Parallel and Distributed Computing

Handling large-scale data operations necessitates the use of parallel processing and distributed systems, allowing tasks to be executed concurrently, thus speeding up computations.

4. Optimization

Often, Data Science tasks involve finding the best solution from a set of possibilities, be it in machine learning model tuning or resource allocation. Optimization techniques are central to these endeavors.
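As one illustration of the optimization idea above, the sketch below applies plain gradient descent to a toy cost function. The function, learning rate, and step count are assumptions chosen for the example; real model tuning typically relies on library optimizers.

```python
def gradient_descent(grad, start, learning_rate=0.1, steps=200):
    """Repeatedly step against the gradient to approach a local minimum."""
    x = start
    for _ in range(steps):
        x -= learning_rate * grad(x)
    return x

# Hypothetical cost function f(x) = (x - 3)^2 + 1, minimized at x = 3.
def cost(x):
    return (x - 3) ** 2 + 1

# Its derivative, f'(x) = 2 * (x - 3).
def cost_gradient(x):
    return 2 * (x - 3)

best_x = gradient_descent(cost_gradient, start=0.0)
print(best_x, cost(best_x))
```

The same loop underlies much of machine learning model training: the "cost" becomes the model's error on the training data, and `x` becomes the model's parameters.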

Domain Expertise: The Contextual Guide

The numbers, algorithms, and computations are only as good as the real-world context they are applied in. Domain expertise ensures that the insights derived are actionable, relevant, and impactful.

1. Industry-Specific Knowledge

Whether it’s predicting stock market trends or diagnosing medical conditions, a deep understanding of the specific industry ensures that data analysis is aligned with real-world scenarios.

2. Ethical Considerations

Especially in sectors like healthcare, finance, and social research, the ethical use of data is paramount. Domain expertise offers a moral compass, ensuring data use respects privacy, consent, and societal implications.

3. Data Source Verification

Knowing the domain helps in understanding where the data is coming from, ensuring its quality, credibility, and relevance to the problem at hand.

Tools of the Trade

In the world of Data Science, an assortment of software and tools has emerged to aid data collection, storage, visualization, and analysis.

1. Programming Languages

Python and R are the titans in Data Science, offering extensive libraries and packages for data manipulation, visualization, and machine learning.

2. Data Visualization Tools

Software like Tableau and Power BI, and libraries like Matplotlib and Seaborn, help in visualizing complex data, making patterns more discernible.

3. Machine Learning Frameworks

TensorFlow, PyTorch, and Scikit-learn are pivotal for developing predictive models, neural networks, and facilitating advanced data analyses.

4. Big Data Platforms

Solutions like Apache Hadoop and Spark play a crucial role in storing and processing vast amounts of data across distributed clusters.

As we delve deeper into the world of Data Science, understanding these pillars is paramount. They not only provide the framework for extracting meaningful insights from data but also highlight the interdisciplinary nature of this field. The blend of statistics, computation, domain knowledge, and the right tools makes Data Science a dynamic and ever-evolving discipline.

Exploring Leading Data Science Software and Languages

Data Science, as a field, is as much about tools as it is about theories and methodologies. The right software or programming language can significantly amplify a data scientist’s capabilities, enabling them to process vast datasets, visualize intricate patterns, and deploy complex models with relative ease. This section surveys the leading software and languages that have become cornerstones of modern data science.

Python: The Swiss Army Knife of Data Science

Python stands out as one of the most versatile and popular languages in the data science realm. Its simplicity and readability, coupled with an extensive ecosystem of libraries, make it an ideal choice for beginners and experts alike.

1. Pandas

For data manipulation and cleaning. Pandas provides data structures that make working with structured data seamless.

2. NumPy

Offers support for mathematical operations and works with arrays and matrices, forming the backbone of many other Python data science tools.

3. Scikit-learn

A comprehensive library for machine learning, offering tools for classification, regression, clustering, and more.

4. TensorFlow and PyTorch

These are deep learning frameworks, facilitating the creation of large-scale neural networks.

5. Matplotlib and Seaborn

Visualization tools that allow for the creation of detailed and informative plots.

R: The Statistician’s Workhorse

R was built with statisticians in mind, making it a powerhouse for statistical modeling and data analysis.

1. dplyr and tidyr

For data manipulation. These packages make data cleaning and transformation a breeze.

2. ggplot2

A leading visualization package that offers a deep level of customization and sophistication in plots.

3. caret

Provides a suite of tools to train and plot machine learning models.

4. Shiny

An R package that allows for the creation of interactive web applications straight from R scripts.

SQL: Navigating Relational Databases

Structured Query Language (SQL) is the standard language for storing, manipulating, and retrieving data in relational databases.

1. MySQL, PostgreSQL, and SQL Server

Popular relational database management systems that use SQL.

2. SQLite

A C-language library that implements an SQL database engine, known for being lightweight.
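The storing, retrieving, and manipulating described above can be sketched with Python's built-in `sqlite3` module, which wraps SQLite. The `orders` table, its columns, and the sample rows are invented for illustration.

```python
import sqlite3

# In-memory database: nothing is written to disk.
conn = sqlite3.connect(":memory:")

# Store: define a table and insert a few hypothetical rows.
conn.execute(
    "CREATE TABLE orders (id INTEGER PRIMARY KEY, customer TEXT, amount REAL)"
)
conn.executemany(
    "INSERT INTO orders (customer, amount) VALUES (?, ?)",
    [("alice", 30.0), ("bob", 12.5), ("alice", 20.0), ("carol", 7.5)],
)
conn.commit()

# Retrieve: total spend per customer, largest first.
totals = conn.execute(
    "SELECT customer, SUM(amount) AS total "
    "FROM orders GROUP BY customer ORDER BY total DESC"
).fetchall()

# Manipulate: apply a 10% discount to one customer's orders.
# The ? placeholder keeps user-supplied values out of the SQL string.
conn.execute("UPDATE orders SET amount = amount * 0.9 WHERE customer = ?", ("bob",))
bob_total = conn.execute(
    "SELECT SUM(amount) FROM orders WHERE customer = ?", ("bob",)
).fetchone()[0]

conn.close()
print(totals, bob_total)
```

The same SQL statements would run largely unchanged against MySQL, PostgreSQL, or SQL Server, although each system has its own driver and minor dialect differences.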

Big Data Platforms: Handling Data at Scale

When datasets grow vast, traditional data processing software can lag. That’s where Big Data platforms come into play.

1. Apache Hadoop

An open-source framework that allows for distributed processing of large datasets across clusters using simple programming models.

2. Apache Spark

A fast and general-purpose cluster-computing system, it provides an interface for programming entire clusters with implicit data parallelism and fault tolerance.

3. Apache Kafka

Used for building real-time data pipelines and streaming apps, it’s horizontally scalable, fault-tolerant, and incredibly fast.

Visualization and BI Tools: Making Data Speak

Visual representation of data can tell stories, uncover patterns, and inform decision-making.

1. Tableau

An interactive data visualization tool that’s user-friendly and can connect to various data sources.

2. Power BI

Microsoft’s Business Intelligence tool is known for its robust visualization capabilities and integration with various data sources.

3. QlikView

A Business Discovery platform that offers self-service BI for all business users.

Understanding the vast landscape of software and languages in data science is key to optimizing your workflow and expanding your capabilities. Whether you’re delving into deep learning, managing mammoth databases, or creating insightful visualizations, equipping yourself with the right tools can make all the difference in successful data-driven ventures.

The Importance of Robust Data Infrastructure

Data infrastructure refers to the architectural framework dedicated to housing, processing, and transporting data. A well-constructed data infrastructure acts as the backbone for all data operations, supporting everything from the most mundane data storage tasks to the most intricate machine learning algorithms. This section underscores why robust data infrastructure is critical, especially in a world that’s generating more data than ever before.

The Foundation: Why Data Infrastructure Matters

In essence, data infrastructure is akin to the foundation of a building: it might not be outwardly visible, but everything stands or falls based on its strength. With businesses becoming increasingly data-driven:

1. Efficiency

A robust infrastructure ensures faster data queries, smoother processing, and optimized storage, ensuring data is available when and where it’s needed.

2. Scalability

Modern data infrastructures are designed to grow. As data volumes increase, the infrastructure can be expanded without overwhelming costs or complexity.

3. Reliability

System downtimes and data losses can be catastrophic. A strong infrastructure ensures high availability, fault tolerance, and timely backups.

4. Security

With rising cyber threats and stringent data protection regulations, a solid data infrastructure must prioritize security mechanisms to safeguard sensitive data.

Components of Modern Data Infrastructure

Modern data infrastructure isn’t just about storage. It encompasses a holistic view of data’s journey.

1. Storage Systems

These range from traditional relational databases like MySQL and PostgreSQL to distributed systems like Apache Cassandra or Hadoop’s HDFS.

2. Processing Engines

Tools like Apache Spark and Apache Flink support large-scale batch and stream processing, including near-real-time workloads.

3. Data Lakes and Warehouses

While data lakes store raw, unprocessed data, warehouses store cleaned, integrated, and structured data optimized for analytics.

4. Data Integration Tools

These facilitate the seamless movement of data between systems. Examples include Apache Kafka for real-time streaming and Apache NiFi for flow-based data processing.

5. Orchestration

Tools like Apache Airflow and Prefect help automate, schedule, and monitor data workflows.
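Orchestration tools model a workflow as a graph of tasks with dependencies and run each task only after its prerequisites finish. The sketch below illustrates that core idea with the standard library's `graphlib` module; it is not the Airflow or Prefect API, and the task names and the `run_pipeline` helper are hypothetical.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
pipeline = {
    "extract": set(),
    "clean": {"extract"},
    "load_warehouse": {"clean"},
    "train_model": {"clean"},
    "report": {"load_warehouse", "train_model"},
}

def run_pipeline(dag, actions):
    """Run every task after all of its dependencies; return the execution order."""
    order = []
    for task in TopologicalSorter(dag).static_order():
        actions[task]()  # a real orchestrator would also retry, log, and schedule
        order.append(task)
    return order

log = []
# Each action just records that it ran; real tasks would move or transform data.
actions = {name: (lambda n=name: log.append(f"ran {n}")) for name in pipeline}

order = run_pipeline(pipeline, actions)
print(order)
```

Production orchestrators add scheduling, retries, monitoring, and parallel execution of independent tasks on top of this ordering step.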

The Shift to Cloud-based Data Infrastructure

The advent of cloud computing has profoundly influenced data infrastructure, offering several advantages:

1. Elasticity

Cloud platforms can scale resources up or down based on demand, ensuring optimal utilization and cost management.

2. Managed Services

Cloud providers offer managed database services, processing engines, and analytics tools, reducing the overhead of maintenance and updates.

3. Global Reach

Cloud providers have data centers across the globe, ensuring low latency and adherence to regional data regulations.

4. Innovation

Cloud providers continually roll out new services and features, ensuring businesses have access to the latest data tools and technologies.

Challenges in Building and Maintaining Data Infrastructure

While the need and benefits are clear, constructing a reliable data infrastructure is not without its challenges:

1. Integration Complexity

As tools and systems proliferate, integrating them seamlessly becomes a herculean task.

2. Cost Management

While cloud offers pay-as-you-go models, without careful management, costs can escalate quickly.

3. Skill Gap

With the rapid evolution of data technologies, there’s a persistent challenge in having skilled professionals who understand the nuances of various tools and platforms.

Robust data infrastructure is non-negotiable in the contemporary business landscape. As the demand for real-time insights, data-driven decision-making, and advanced analytics grows, the infrastructure supporting these operations must be equally resilient, scalable, and future-ready. It’s not just about housing data; it’s about ensuring that data, in all its forms and volumes, can be harnessed effectively to fuel innovation and growth.

Algorithms at the Heart of Predictive Analysis

The power of predictive analysis lies in its ability to transform raw data into valuable foresight, helping organizations anticipate and act on future outcomes. These insights don’t magically appear. They are the result of intricate algorithms that sift through data, learn patterns, and make predictions. This section delves into the algorithms propelling predictive analysis, shining a light on the mathematical and computational machinery driving this exciting realm of data science.

Understanding Predictive Algorithms

Predictive algorithms can be viewed as mathematical models designed to recognize patterns. Using historical data as their training ground, these algorithms learn the nuances of these patterns and apply this knowledge to make predictions about future, unseen data.

Supervised Learning

Supervised learning is the most common approach to predictive analysis. The algorithm is trained on a labeled dataset, “learns” the relationship between inputs and labels, and then makes predictions for new inputs.

Feedback Loop

To stay accurate, the algorithm’s predictions are often compared with actual outcomes, and the results are fed back into the system to refine subsequent predictions.

Common Predictive Algorithms

While the world of predictive analysis is vast, some algorithms have become the gold standard due to their effectiveness and versatility.

Linear Regression

A statistical method that predicts a continuous value based on the linear relationship between independent and dependent variables.

Decision Trees

A flowchart-like structure where each internal node tests a feature, each branch represents an outcome of that test, and each leaf gives a prediction.

Random Forest

An ensemble method that creates a ‘forest’ of decision trees and outputs the mode of the classes (classification) or mean prediction (regression) of individual trees.

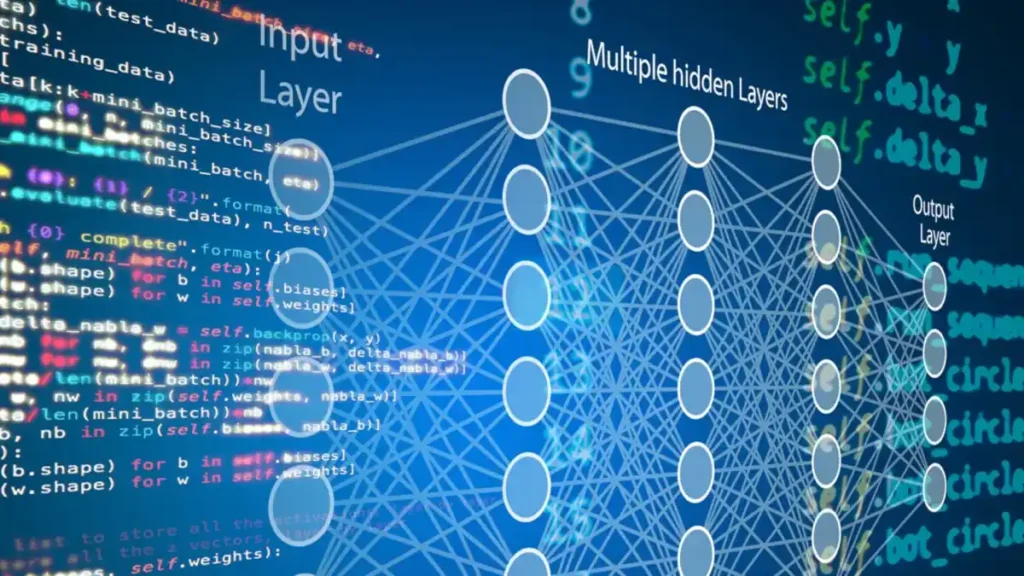

Neural Networks

Inspired by the human brain, a neural network consists of interconnected nodes or ‘neurons’ and can model complex, non-linear relationships.

Support Vector Machines

Used for classification and regression, SVMs find a hyperplane that best divides a dataset into classes.
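The way a Random Forest combines its trees, described above, can be sketched in a few lines. The three "trees" here are hand-written rules on a hypothetical transaction (amount, hour of day), purely to show the aggregation step; a real forest learns its trees from data, for example with Scikit-learn.

```python
import statistics

# Three hypothetical "trees", each a fixed rule on (amount, hour_of_day).
def tree_a(amount, hour):
    return "fraud" if amount > 1000 else "ok"

def tree_b(amount, hour):
    return "fraud" if hour < 5 else "ok"

def tree_c(amount, hour):
    return "fraud" if amount > 500 and hour < 8 else "ok"

def forest_classify(trees, amount, hour):
    """Classification: return the most common (mode) class among the trees' votes."""
    votes = [tree(amount, hour) for tree in trees]
    return statistics.mode(votes)

def forest_regress(predictions):
    """Regression: return the mean of the individual trees' numeric predictions."""
    return statistics.mean(predictions)

trees = [tree_a, tree_b, tree_c]
label_night = forest_classify(trees, 800, 3)   # votes: ok, fraud, fraud
label_day = forest_classify(trees, 800, 14)    # votes: ok, ok, ok
price_estimate = forest_regress([210.0, 190.0, 200.0])

print(label_night, label_day, price_estimate)
```

Because each tree sees the problem slightly differently, the combined vote tends to be more stable than any single tree's answer.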

The Role of Data in Predictive Analysis

Predictive algorithms, while powerful, are only as good as the data they’re trained on.

Quality Over Quantity

Having vast amounts of data is less crucial than having relevant and accurate data. Garbage in results in garbage out.

Feature Engineering

The process of selecting and transforming variables in your data to improve the predictive power of the algorithm.

Bias and Fairness

It’s essential to ensure that the data used does not introduce biases, leading to unfair or discriminatory predictions.
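Two common feature engineering transformations, scaling a numeric column and one-hot encoding a categorical one, can be sketched as follows. The helper names and sample columns are hypothetical; libraries such as Pandas and Scikit-learn provide production-grade equivalents.

```python
def min_max_scale(values):
    """Rescale numeric values to the range [0, 1]."""
    lo, hi = min(values), max(values)
    if hi == lo:
        # A constant column carries no information; map everything to 0.
        return [0.0 for _ in values]
    return [(v - lo) / (hi - lo) for v in values]

def one_hot(values):
    """Turn a categorical column into one 0/1 column per category."""
    categories = sorted(set(values))
    rows = [[1 if v == c else 0 for c in categories] for v in values]
    return categories, rows

# Hypothetical raw columns.
ages = [20, 30, 40, 60]
cities = ["paris", "tokyo", "paris", "lima"]

scaled_ages = min_max_scale(ages)
categories, encoded_cities = one_hot(cities)

print(scaled_ages)
print(categories, encoded_cities)
```

Scaling keeps features with large numeric ranges from dominating distance-based or gradient-based algorithms, and one-hot encoding lets algorithms that expect numbers work with categories.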

Evaluating and Fine-tuning Predictive Models

An algorithm’s predictive prowess must be continually assessed and fine-tuned.

Training and Testing Data

Data is typically split, with a portion used for training and the rest for testing the algorithm’s predictions.

Cross-Validation

A technique to assess how the results of a predictive model will generalize to an independent dataset.

Metrics

Various metrics, such as Mean Absolute Error (for regression) or Accuracy and F1 Score (for classification), can be used to gauge performance.
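The evaluation steps above can be sketched with the standard library alone. All function names below are hypothetical helpers written for this example, and the labels and predictions are made up; Scikit-learn offers tested versions of each.

```python
import random

def train_test_split(rows, test_fraction=0.25, seed=0):
    """Shuffle a copy of the rows and split it into (train, test)."""
    shuffled = rows[:]
    random.Random(seed).shuffle(shuffled)
    n_test = int(len(shuffled) * test_fraction)
    return shuffled[n_test:], shuffled[:n_test]

def k_fold_indices(n, k):
    """Yield (train_indices, test_indices) for k roughly equal folds."""
    folds = [list(range(i, n, k)) for i in range(k)]
    for i, test_idx in enumerate(folds):
        train_idx = [j for m, fold in enumerate(folds) if m != i for j in fold]
        yield train_idx, test_idx

def mean_absolute_error(actual, predicted):
    """Regression metric: average size of the prediction error."""
    return sum(abs(a - p) for a, p in zip(actual, predicted)) / len(actual)

def accuracy(actual, predicted):
    """Classification metric: fraction of predictions that match the labels."""
    return sum(a == p for a, p in zip(actual, predicted)) / len(actual)

def f1_score(actual, predicted, positive=1):
    """Classification metric: harmonic mean of precision and recall."""
    pairs = list(zip(actual, predicted))
    tp = sum(a == positive and p == positive for a, p in pairs)
    fp = sum(a != positive and p == positive for a, p in pairs)
    fn = sum(a == positive and p != positive for a, p in pairs)
    if tp == 0:
        return 0.0
    precision, recall = tp / (tp + fp), tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)

train, test = train_test_split(list(range(20)))
folds = list(k_fold_indices(10, 5))
mae = mean_absolute_error([3.0, 5.0, 2.0], [2.5, 5.0, 4.0])
acc = accuracy([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
f1 = f1_score([1, 0, 1, 1, 0], [1, 0, 0, 1, 1])
print(len(train), len(test), mae, acc, f1)
```

In cross-validation, the model is trained and scored once per fold and the scores are averaged, which gives a steadier estimate than a single train/test split.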

Predictive analysis stands at the intersection of data, technology, and business decisions. By leveraging powerful algorithms, organizations can glean forward-looking insights, allowing them to strategize better, minimize risks, and grasp opportunities. Algorithms are the mathematical heartbeat of this domain, so understanding and choosing the right ones is imperative. It’s not merely about predicting the future; it’s about shaping it.

How Data Science is Revolutionizing Industries

The transformative power of data science is not confined to academic papers or high-tech labs; it’s creating waves of change across various industries. By harnessing vast amounts of data, businesses are not only optimizing their current processes but also pioneering novel approaches to age-old challenges. This section showcases how the tools and techniques of data science are reshaping multiple industries, heralding a new age of innovation and efficiency.

Healthcare: Precision Medicine and Predictive Care

1. Personalized Treatments

Data science allows for the analysis of genetic information, leading to treatments tailored for individual genetic makeups.

2. Disease Prediction

By analyzing patient records and lifestyles, predictive models can forecast disease outbreaks and individual health risks.

3. Medical Imaging

Advanced algorithms can now assist radiologists in detecting anomalies in X-rays, MRIs, and CT scans with increased accuracy.

Finance: Risk Management and Automated Trading

1. Credit Scoring

By analyzing transaction history, social media activities, and other alternative data, financial institutions can more accurately assess creditworthiness.

2. Algorithmic Trading

Data-driven models enable high-frequency trading strategies, outpacing human traders in speed and precision.

3. Fraud Detection

Machine learning models continually monitor transaction patterns to detect and prevent fraudulent activities in real time.
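As a deliberately simple stand-in for the fraud detection idea above, the sketch below flags transactions that sit far from a customer's historical average using a z-score. This is a basic statistical rule, not a machine learning model; the threshold, helper name, and amounts are assumptions for illustration.

```python
import statistics

def flag_outliers(history, new_amounts, threshold=3.0):
    """Flag amounts more than `threshold` standard deviations from the historical mean."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # No variation in the history: anything different is unusual.
        return [amt for amt in new_amounts if amt != mean]
    return [amt for amt in new_amounts if abs(amt - mean) / stdev > threshold]

# Hypothetical card history (in dollars) and three incoming transactions.
history = [20, 25, 22, 30, 27, 24, 26, 23]
suspicious = flag_outliers(history, [28, 31, 450])
print(suspicious)
```

Real systems typically combine many signals (merchant, location, timing) and learned models, but the underlying question is the same: how unusual is this transaction compared with past behavior?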

Retail and E-Commerce: Enhanced Customer Experiences

1. Recommendation Engines

Using past purchase history and browsing behavior, businesses can suggest products, increasing sales and enhancing user experience.

2. Inventory Management

Predictive analysis helps retailers forecast demand, reducing stockouts or overstock situations.

3. Sentiment Analysis

By analyzing customer reviews and social media feedback, businesses can gain insights into product performance and customer preferences.
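A recommendation engine based on past purchases can be approximated, in its simplest form, by counting which items are bought together. The sketch below assumes a hypothetical list of shopping baskets and a made-up `recommend` helper; production systems use far richer models.

```python
from collections import Counter

def recommend(baskets, item, top_n=2):
    """Suggest the items most often bought together with `item`."""
    co_counts = Counter()
    for basket in baskets:
        if item in basket:
            co_counts.update(other for other in basket if other != item)
    return [name for name, _ in co_counts.most_common(top_n)]

# Hypothetical purchase history: each set is one customer's basket.
baskets = [
    {"laptop", "mouse", "bag"},
    {"laptop", "mouse"},
    {"laptop", "mouse", "dock"},
    {"laptop", "bag"},
    {"phone", "case"},
]

suggestions = recommend(baskets, "laptop")
print(suggestions)
```

Here a shopper viewing a laptop would be shown the mouse first, since it appears in the most laptop baskets.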

Transportation and Logistics: Smart Mobility Solutions

1. Route Optimization

Advanced algorithms can predict traffic patterns and suggest optimal routes, reducing delivery times and fuel consumption.

2. Predictive Maintenance

Data-driven insights help predict when parts are likely to fail, reducing downtime and improving safety.

3. Autonomous Vehicles

Leveraging vast amounts of data from sensors, cars are inching closer to full autonomy, promising safer and more efficient roads.

Energy: Towards Sustainable Solutions

1. Smart Grids

Real-time data analysis helps balance and distribute energy supply efficiently across grids, accommodating renewable energy sources.

2. Predictive Equipment Failure

Sensors and predictive models reduce unexpected downtimes by forecasting equipment failures.

3. Optimized Energy Consumption

Data analytics in buildings and factories helps optimize energy use, reducing costs and carbon footprints.

Agriculture: Data-driven Farming

1. Precision Agriculture

Using data from satellites, drones, and sensors, farmers can optimize irrigation, fertilization, and pest control.

2. Crop Yield Prediction

Predictive models assess weather patterns, soil quality, and historical yield data to forecast crop outputs.

3. Supply Chain Transparency

Blockchain and data analytics provide insights into the agricultural supply chain, ensuring food safety and sustainable practices.

Across industries, the story is much the same: data science is ushering in an era of profound change. Whether it’s through optimized processes, innovative products, or entirely new business models, the blend of data and algorithmic prowess is setting the stage for a brighter, more efficient future. As these revolutions unfold, industries not only evolve but also interlink through data, offering boundless opportunities for innovation and growth.

Predicting Trends and Technological Advancements

In our rapidly evolving digital age, staying a step ahead is imperative for both businesses and individuals. This requires not just understanding the present but also anticipating the future. Through data science, we are now better equipped than ever to predict technological trends and advancements, setting the stage for proactive innovation and strategy. This section looks at how the analytical prowess of data science helps us forecast the trajectory of technology and the overarching trends that accompany it.

From Reactive to Proactive Innovation

1. Understanding Change Velocity

Data science provides tools to measure how quickly technology domains are evolving, allowing industries to match or exceed that pace.

2. Identifying Inflection Points

By analyzing data, it’s possible to pinpoint moments when steady technological trends might undergo rapid acceleration or change direction entirely.

Harnessing Big Data for Predictive Analysis

1. Mining Social Media

By analyzing the sentiments and trends on platforms like Twitter, Facebook, and LinkedIn, it’s feasible to spot budding technological trends.

2. Academic and Research Insights

Scrutinizing publications, patent filings, and academic research can offer glimpses into future technological breakthroughs.

Future Tech: What Data Tells Us

1. Quantum Computing

Data suggests increasing investment in quantum research, hinting at breakthroughs that could redefine computational capabilities. The age of quantum computing may be approaching.

2. Augmented Reality (AR) and Virtual Reality (VR)

With the growth of immersive technologies, data predicts an expansion of AR and VR into education, healthcare, and entertainment sectors.

3. Biotechnology

Data indicates that areas like gene editing, personalized medicine, and bioinformatics are poised for significant advancements.

Implications of Predicted Trends on Industries

1. Job Market Evolution

Anticipated technological advancements might reshape job roles, requiring new skills and rendering others obsolete.

2. Business Model Innovations

Predicted trends could drive companies to rethink their value proposition, delivery mechanisms, and customer engagement strategies.

3. Regulatory Challenges

Rapid tech advancements might outpace current legal and ethical frameworks, demanding agile and forward-thinking governance.

Preparing for the Unpredictable

1. Embracing Continuous Learning

With technology’s ever-changing landscape, the only constant will be the need for upskilling and reskilling.

2. Building Adaptive Infrastructures

For businesses, flexibility in IT infrastructure, operations, and strategy will be paramount to navigate unforeseen technological shifts.

3. Ethical Considerations

As we predict and shape the future, it’s vital to consider the ethical implications of our advancements, ensuring that innovation benefits humanity at large.

Predicting the future has always been a mix of science, art, and sometimes sheer luck. But with data science in our arsenal, the scales are tipping towards science. By diligently analyzing the past and the present, we can glean invaluable insights into the future, allowing businesses, policymakers, and individuals to adapt, innovate, and thrive. While predictions may never be foolproof, in the world of data science, they are increasingly educated, refined, and actionable.

Carving a Niche in the Data World

The universe of data science is expansive, and while a holistic grasp is essential, specialization often paves the path to mastery and differentiation. In a field crowded with data enthusiasts, it’s vital for budding data scientists to carve a niche for themselves, offering unique expertise or perspective. This section provides insights into how an individual or organization can stand out in the competitive realm of data science by tailoring a specialized approach.

Recognizing the Breadth and Depth of Data Science

1. The Wide Spectrum

Understanding the vast range of data science—ranging from data engineering to machine learning to business analytics—provides clarity about possible specialization areas.

2. Diving Deep

Depth often trumps breadth; mastering a single domain can be more impactful than a superficial understanding of many.

Strategies for Niche Specialization

1. Identify Industry Needs

Look for sectors that are data-rich but lack the expertise to extract insights. Sectors like agriculture, arts, or niche manufacturing could be ripe for specialized data solutions.

2. Pursue Personal Passion

Authenticity shines. If there’s a subject or field you’re genuinely passionate about, combining it with data science can lead to fulfilling and unique work.

3. Future Forecasting

As previously discussed, predicting technological trends can spotlight emerging areas in data science awaiting specialists.

Telling a Unique Data Story

1. Portfolio Building

A well-curated portfolio that demonstrates specialized projects, analyses, or insights can make a substantial impact on prospective employers or clients.

2. Narrative Skills

Beyond technical prowess, the ability to articulate findings, tell compelling data stories, and relate specialized insights to a broader audience is invaluable.

3. Networking

Engaging with professionals within your niche, attending seminars, or even organizing workshops can solidify your position as a thought leader in your specialization.

Challenges of Specialization

1. The Risk of Over-Specialization

While carving a niche is valuable, it’s essential to strike a balance. Becoming too narrow can risk obsolescence if the specialized domain loses relevance.

2. Continuous Upskilling

Every niche in data science will evolve. Continuous learning and adapting to the latest tools, techniques, and trends within the specialized area are paramount.

Examples of Successful Niche Data Scientists

1. Healthcare Imaging Experts

Specializing in medical image analysis, these professionals harness algorithms to detect and diagnose conditions, improving healthcare outcomes.

2. Sports Analysts

Merging a love for sports with data, these experts provide insights into player performances, game strategies, and fan engagement.

3. Art and Data Fusionists

At the intersection of art and analytics, these unique professionals utilize data to influence artistic creations or understand art trends.

In the vast, interconnected web of data science, carving a niche can be the distinguishing factor that propels an individual or organization to the forefront. By coupling deep knowledge in a specific domain with the broader principles of data science, one can offer unparalleled expertise and insights. Whether driven by market demands, personal passions, or foresight, a well-chosen specialization can lead to remarkable innovation, impact, and recognition in the data world.

Recommended Books, Courses, and Communities

Diving into the world of data science demands a plethora of resources to guide, teach, and inspire. For those hungry to expand their knowledge and network, a curated list of books, courses, and communities is indispensable. These resources cater to various learning styles and provide a holistic understanding of the discipline.

Must-Read Books

- “The Data Science Handbook” by Field Cady: An all-encompassing guide covering core concepts. A separate book of the same title, by Carl Shan and co-authors, features interviews with leading data scientists.

- “Python for Data Analysis” by Wes McKinney: A primer on leveraging Python, one of data science’s most vital tools.

- “Storytelling with Data” by Cole Nussbaumer Knaflic: Focusing on the art of visualizing data and crafting compelling narratives.

Top Courses to Consider

- Coursera’s “Data Science Specialization”: Offered by Johns Hopkins University, it’s a comprehensive series of courses covering everything from data cleaning to advanced statistical techniques.

- edX’s “Professional Certificate in Data Science”: Offered by Harvard University, this program delves into R programming, machine learning, and advanced statistical concepts.

- Udacity’s “Data Analyst Nanodegree”: A project-based program focusing on data wrangling, visualization, and statistical tests.

Thriving Communities for Networking and Support

- Kaggle: Beyond just a platform for data science competitions, Kaggle boasts forums and datasets that foster learning and collaboration.

- Data Science Stack Exchange: A Q&A platform where data professionals discuss technical challenges and share insights.

- Meetup’s Data Science Groups: Local chapters exist globally, hosting events, workshops, and networking sessions for data enthusiasts.

Conclusion

In the intricate journey of understanding the world of data, our exploration of Data Science basics has only scratched the surface. This discipline, though rooted in mathematical and computational concepts, is so much more—it’s the nexus of industry knowledge, technological innovation, and the profound capability to derive insights from a sea of information.

As you take the leap into deeper waters, let this primer on Data Science basics be your beacon, guiding you toward mastery and innovation in this boundless domain. Equip yourself with the right tools, continuously seek knowledge, and always remain curious. After all, every byte of data holds a story waiting to be discovered.