Step into a time machine and journey back to the 1980s – a pivotal decade in the history of networking. From the birth of the IBM PC to the rise of Ethernet and fiber optics, this era marked a revolution in how we connect and communicate.

Dive into our article to explore these groundbreaking moments that shaped the digital world we live in today.

Index

- Introduction to Networking in the 1980s

- The Rise of the IBM PC and PC-based LANs

- Ethernet and LAN Networking Developments

- The Advent of Fiber-Optic Cabling in Networking

- The Network File System (NFS)

- The Emergence and Impact of Token Ring Topology

- ZX Spectrum

- The 7-Layer OSI Abstraction Model

- AT&T Divestiture: Reshaping the WAN Landscape in 1984

- SS7: Revolutionizing Telephony in the 1980s

- The Introduction and Evolution of ISDN

- The first fiber-optic transatlantic undersea cable

- The SONET Standard

- ATM – Asynchronous Transfer Mode

- ARPANET and MILNET

- CERN and the HTTP

- The National Science Foundation NETwork

- Analog Cellular

- Microsoft’s MS-DOS

- Microsoft Windows

- RISC Processor and Apple Macintosh

- Conclusion: The Legacy of 1980s Networking Advances

- References

1. Introduction to Networking in the 1980s

In the 1980s, the landscape of computing underwent a significant shift. The era witnessed the growth of client/server LAN architectures, which marked a move away from the previously dominant mainframe computing environments. This transition was driven by the increasing need for more decentralized and flexible computing solutions, particularly in business and corporate settings. The client/server model offered greater scalability and efficiency, enabling organizations to tailor their IT infrastructure to specific needs and paving the way for more personalized computing experiences.

2. The Rise of the IBM PC and PC-based LANs

The advent of the IBM PC in 1981 and the standardization and cloning of this system led to an explosion of PC-based LANs in businesses and corporations around the world, especially with the release of the IBM PC AT system in 1984.

The number of PCs in use grew from 2 million in 1981 to 65 million in 1991. Novell, which came on the scene in 1983, became a major player in file and print servers for LANs with its Novell NetWare NOS.

This era marked the beginning of widespread network connectivity in the business world, laying the groundwork for the modern networked environment.

3. Ethernet and LAN Networking Developments

The biggest development in LAN networking in the 1980s was the evolution and standardization of Ethernet.

While the DIX consortium worked on standard Ethernet in the late 1970s, the IEEE began its Project 802 initiative, which aimed to develop a single, unified standard for all LANs. When it became clear that this was impossible, 802 was divided up into a number of working groups, with 802.3 focusing on Ethernet, 802.4 on Token Bus, and 802.5 on Token Ring technologies and standards.

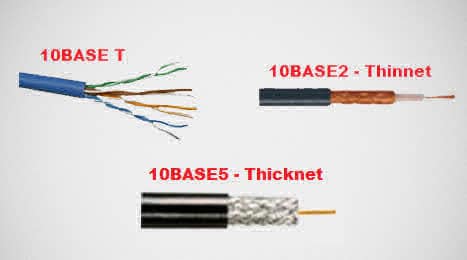

The work of the 802.3 group culminated in 1983 with the release of the IEEE 802.3 10Base5 Ethernet standard. Nicknamed thicknet because it used thick coaxial cable, it was virtually identical to the work already done by DIX. In 1985, this standard was extended as 10Base2 to include thin coaxial cable, commonly called thinnet.

Throughout most of the 1980s, coaxial cable was the primary form of premise cabling in Ethernet implementations. However, a company called SynOptics Communications developed a product called LattisNet for transmitting 10-Mbps Ethernet over twisted-pair wiring using a star-wired topology connected to a central hub or repeater. This wiring was cheaper than coaxial cable and similar to the wiring used in residential and business telephone systems.

LattisNet was such a commercial success that in 1990 the 802.3 committee approved a new standard, 10BaseT, for Ethernet over twisted-pair wiring. 10BaseT soon superseded the coaxial forms of Ethernet because of its ease of installation and because it could be installed in a hierarchical star-wired topology that was a good match for the architectural topology of multistory buildings.

4. The Advent of Fiber-Optic Cabling in Networking

In other Ethernet developments, fiber-optic cabling, which Corning first developed in the early 1970s, found its first commercial networking use in Ethernet in 1984.

(The technology itself was standardized as 10BaseFL in the early 1990s.)

Ethernet bridges became available in 1984 from DEC and were used both to connect separate Ethernet LANs to make large networks and to reduce traffic bottlenecks on overloaded networks by splitting them into separate segments.

Routers could be used for similar purposes, but during the 1980s bridges generally offered better price/performance and less complexity. Once again, the market moved ahead of the standards: work on the IEEE 802.1D bridging standard began in 1987, but it was not finalized until 1990.

5. The Network File System (NFS)

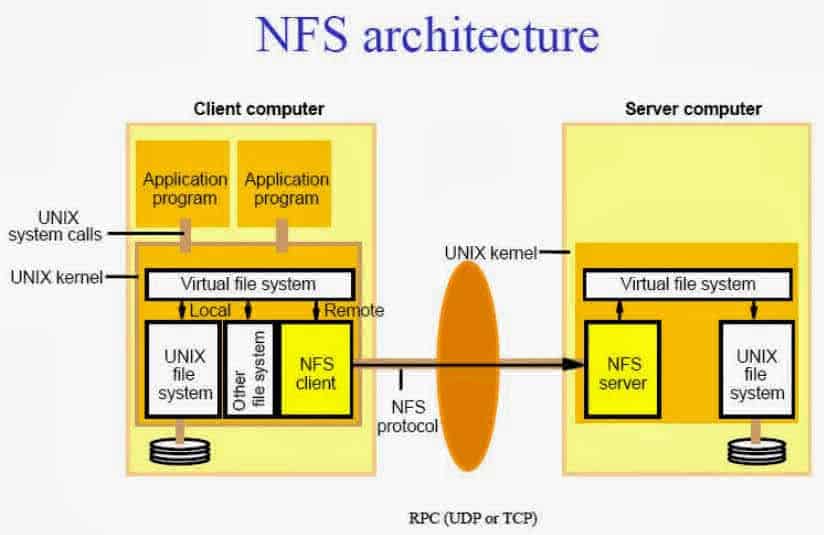

The development of the Network File System (NFS) by Sun Microsystems in 1985 resulted in a proliferation of diskless UNIX workstations with built-in Ethernet interfaces that also drove the demand for Ethernet and accelerated the deployment of bridging technologies for segmenting LANs.

Also around 1985, increasing numbers of UNIX machines and LANs were connected to ARPANET, which until that time had been mainly a network of mainframe and minicomputer systems. The first UNIX implementation of TCP/IP came in version 4.2 of Berkeley’s BSD UNIX (4.2BSD), from which other vendors such as Sun Microsystems quickly derived their own TCP/IP implementations. Although PC-based LANs became popular in business and corporate settings during the 1980s, UNIX continued to dominate in academic and professional high-end computing environments as the mainframe environment declined.

6. The Emergence and Impact of Token Ring Topology

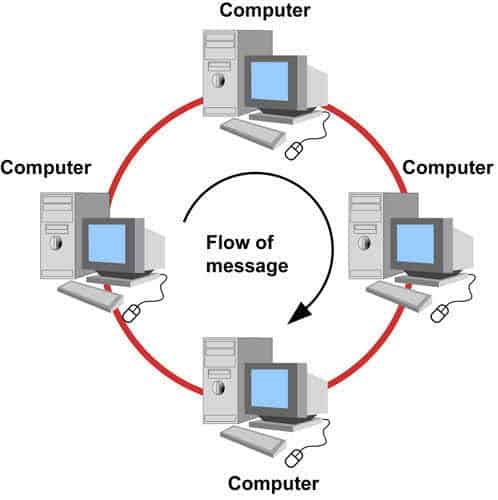

IBM introduced its Token Ring networking technology in 1985 as an alternative LAN technology to Ethernet. IBM had submitted its technology to the IEEE in 1982 and it was standardized by the 802.5 committee in 1984.

IBM soon supported the integration of Token Ring with its existing SNA networking services and protocols for IBM mainframe computing environments.

The initial Token Ring specifications delivered data at 1 Mbps and 4 Mbps, but IBM dropped the 1-Mbps version in 1989 when it introduced a newer 16-Mbps version.

Interestingly, no formal IEEE specification exists for 16-Mbps Token Ring; vendors simply adopted IBM’s technology for the product. Since then, advances in the technology have included high-speed 100-Mbps Token Ring and Token Ring switching technologies that support virtual LANs (VLANs). Nevertheless, Ethernet remains far more widely deployed than Token Ring.

Also in the field of local area networking, the American National Standards Institute (ANSI) began standardizing the specifications for Fiber Distributed Data Interface (FDDI) in 1982. FDDI was designed to be a high-speed (100 Mbps) fiber-optic networking technology for LAN backbones on campuses and industrial parks. The final FDDI specification was completed in 1988, and deployment in campus LAN backbones grew during the late 1980s and the early 1990s.

7. ZX Spectrum

In April 1982, the ZX Spectrum microcomputer was released in the UK. The Spectrum was among the first home computers in the United Kingdom aimed at a mainstream audience, giving it a significance similar to that of the Commodore 64 in the US and the Thomson MO5 in France. Its introduction led to a boom in companies producing software and hardware for the machine, whose effects are still felt today.

8. The 7-Layer OSI Abstraction Model

In 1983, the ISO developed an abstract seven-layer model for networking called the Open Systems Interconnection (OSI) reference model. Although some commercial networking products were developed based on OSI protocols, the standard never really took off, primarily because of the predominance of TCP/IP. Other standards from the ISO and ITU that emerged in the 1980s included the X.400 electronic messaging standards and the X.500 directory recommendations.

9. AT&T Divestiture: Reshaping the WAN Landscape in 1984

A major event in the telecommunications and WAN field in 1984 was the divestiture of AT&T, the result of a seven-year antitrust suit brought against AT&T by the U.S. Justice Department. AT&T’s 22 Bell operating companies were formed into seven new RBOCs. This meant the end of the Bell System, and because Bell Laboratories remained with AT&T, the RBOCs soon formed Bellcore as their own telecommunications research establishment. The United States was divided into Local Access and Transport Areas (LATAs), with intra-LATA communication handled by local exchange carriers (the Bell Operating Companies or BOCs) and inter-LATA communication handled by inter-exchange carriers (IXCs) such as AT&T, MCI, and Sprint.

The result of the breakup for wide area networking was increased competition, which led to new technologies and lower costs. One of the first effects was the offering of T1 services to subscribers in 1984. Until then, this technology had been used only for backbone circuits for long-distance communication. New hardware devices were offered to take advantage of the increased bandwidth, especially high-speed T1 multiplexers, or muxes, that could combine voice and data into a single communication stream. 1984 also saw the development of digital Private Branch Exchange (PBX) systems by AT&T, bringing new levels of power and flexibility to corporate subscribers.

10. SS7: Revolutionizing Telephony in the 1980s

The deployment of the Signaling System #7 (SS7) in the 1980s, first in Sweden and then in the United States, revolutionized the Public Switched Telephone Network (PSTN). SS7, a digital signaling system, introduced a range of advanced telephony services. It enabled features like caller ID, call blocking, and automatic callback, enhancing user experience and offering new levels of functionality in telecommunications. This evolution marked a significant leap in how telephone networks operated, paving the way for more efficient and sophisticated communication services.

11. The Introduction and Evolution of ISDN

The first trials of ISDN, a fully digital telephony technology that runs on existing copper local loop lines, began in Japan in 1983 and in the United States in 1987. (All major metropolitan areas in the United States have since been upgraded to make ISDN available to those who want it, but ISDN has not caught on as a WAN technology as much as it has in Europe.)

12. The first fiber-optic transatlantic undersea cable

In the 1980s, fiber-optic cabling emerged as a significant advancement in networking and telecommunications. This period marked the beginning of fiber optics replacing traditional copper cabling systems, offering far superior data transmission capabilities. A pivotal moment came in 1988 with the laying of TAT-8, the first fiber-optic transatlantic undersea cable, which dramatically increased the capacity of transatlantic communication. Fiber optics thus became a cornerstone technology for high-speed data transfer and international connectivity.

13. The SONET Standard

The 1980s also saw the standardization of SONET technology, a high-speed physical layer (PHY) fiber-optic networking technology developed from time-division multiplexing (TDM) digital telephone system technologies. Before the divestiture of AT&T in 1984, local telephone companies had to interface their own TDM-based digital telephone systems with proprietary TDM schemes of long-distance carriers, and incompatibilities created many problems.

This provided the impetus for creating the SONET standard, which was finalized in 1989 through a series of standards from the CCITT (the International Telegraph and Telephone Consultative Committee, whose acronym comes from its French name) called G.707, G.708, and G.709. By the mid-1990s, almost all long-distance telephone traffic in the United States used SONET on trunk lines as the physical interface.

14. ATM – Asynchronous Transfer Mode

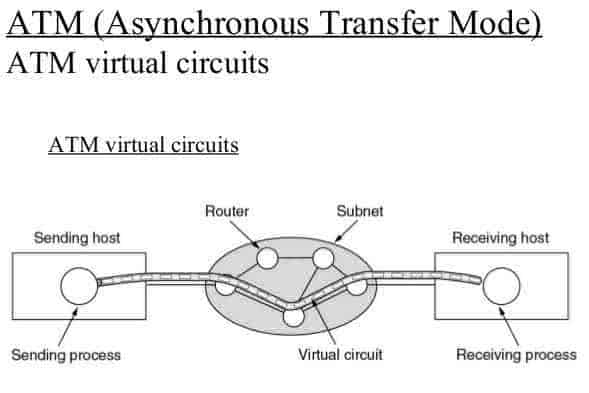

The 1980s brought the first test implementations of Asynchronous Transfer Mode (ATM) high-speed cell-switching technologies, which could use SONET as the physical interface. Many concepts basic to ATM were developed in the early 1980s at the France-Telecom laboratory in Lannion, France, particularly the PRELUDE project, which demonstrated the feasibility of end-to-end ATM networks running at 62 Mbps. The 53-byte ATM cell format was standardized by the CCITT in 1988, and the new technology was given a further push with the creation of the ATM Forum in 1991.

Since then, use of ATM has grown significantly in telecommunications provider networks, and ATM has become a high-speed backbone technology in many enterprise-level networks around the world. However, the vision of ATM on users’ desktops has not been realized because of the emergence of cheaper Fast Ethernet and Gigabit Ethernet LAN technologies, and because of the complexity of ATM itself.
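The 53-byte ATM cell format consists of a 5-byte header followed by a 48-byte payload. The Python sketch below illustrates that framing; it is an illustration only, assuming the user-network interface (UNI) header layout (GFC, VPI, VCI, PT, and CLP fields) and the CRC-8 header error control (HEC) byte from the CCITT/ITU-T ATM recommendations. Zero-padding the last cell is a simplification standing in for a real ATM adaptation layer, and the function names (`uni_header`, `segment`) are hypothetical.

```python
# Sketch of ATM cell framing: 5-byte header + 48-byte payload = 53 bytes.
# Assumptions: UNI header layout (GFC 4 bits, VPI 8, VCI 16, PT 3, CLP 1) and
# HEC = CRC-8 (generator x^8 + x^2 + x + 1) over the first 4 header bytes, XORed with 0x55.
# Zero-padding the final payload is a simplification; real AALs add their own trailers.

CELL_SIZE = 53
HEADER_SIZE = 5
PAYLOAD_SIZE = CELL_SIZE - HEADER_SIZE  # 48 bytes


def crc8(data: bytes, poly: int = 0x07) -> int:
    """Bitwise MSB-first CRC-8 with generator x^8 + x^2 + x + 1 and initial value 0."""
    crc = 0
    for byte in data:
        crc ^= byte
        for _ in range(8):
            if crc & 0x80:
                crc = ((crc << 1) ^ poly) & 0xFF
            else:
                crc = (crc << 1) & 0xFF
    return crc


def uni_header(vpi: int, vci: int, pt: int = 0, clp: int = 0, gfc: int = 0) -> bytes:
    """Pack a hypothetical 5-byte UNI cell header: 4 field bytes plus the HEC byte."""
    word = (
        (gfc & 0xF) << 28
        | (vpi & 0xFF) << 20
        | (vci & 0xFFFF) << 4
        | (pt & 0x7) << 1
        | (clp & 0x1)
    )
    first4 = word.to_bytes(4, "big")
    hec = crc8(first4) ^ 0x55  # coset value added to the CRC remainder
    return first4 + bytes([hec])


def segment(data: bytes, vpi: int, vci: int) -> list[bytes]:
    """Split data into 53-byte cells on one virtual circuit, zero-padding the last payload."""
    header = uni_header(vpi, vci)
    cells = []
    for offset in range(0, len(data), PAYLOAD_SIZE):
        chunk = data[offset:offset + PAYLOAD_SIZE].ljust(PAYLOAD_SIZE, b"\x00")
        cells.append(header + chunk)
    return cells
```

For example, `segment(bytes(100), vpi=1, vci=32)` yields three 53-byte cells, because 100 bytes need three 48-byte payloads. The small fixed payload was a compromise that kept per-cell delay low enough for voice while still carrying data efficiently.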

The convergence of voice, data, and broadcast information remained a distant vision throughout the 1980s and was even set back by the proliferation of networking technologies, the competition between cable and broadcast television, and the slow adoption of residential ISDN. New services did appear, however, especially in the area of commercial online services such as America Online (AOL), CompuServe, and Prodigy, which offered consumers e-mail, bulletin board systems (BBSs), and other services.

15. ARPANET and MILNET

A significant milestone in the development of the Internet occurred in 1982 when the networking protocol of ARPANET was switched from NCP to TCP/IP. On January 1, 1983, NCP was turned off permanently; anyone who hadn’t migrated to TCP/IP was out of luck. Also in 1983, ARPANET, which connected several hundred systems, was split into two parts: ARPANET and MILNET.

TCP/IP Adoption

The first international use of TCP/IP took place in 1984 at CERN, a physics research center in Geneva, Switzerland. TCP/IP was designed to provide a way of networking different computing architectures in heterogeneous networking environments. Such a protocol was badly needed because of the proliferation of vendor-specific networking architectures in the preceding decade, including “homegrown” solutions developed at many government and educational institutions.

TCP/IP made it possible to connect diverse architectures such as UNIX workstations, VMS minicomputers, and CRAY supercomputers into a single operational network. TCP/IP soon superseded proprietary protocols such as Xerox Network Systems (XNS), ChaosNet, and DECnet. It has since become the de facto standard for internetworking all types of computing systems.

16. CERN and the HTTP

CERN was primarily a research center for high-energy particle physics, but it became an early European pioneer of TCP/IP and by 1990 was the largest subnetwork of the Internet in Europe. In 1989, a CERN researcher named Tim Berners-Lee proposed the hypertext system that became the World Wide Web (WWW), built on the Hypertext Transfer Protocol (HTTP) that he went on to develop.

And all of this developed as a sideline to the real research being done at CERN: slamming together protons and electrons at high speeds to see what fragments appear!

Also important to the development of Internet technologies and protocols was the introduction of the Domain Name System (DNS) in 1984. At that time, ARPANET had more than 1000 nodes, and trying to remember them by their numerical IP addresses was a headache. The Network News Transfer Protocol (NNTP) was developed in 1987, and Internet Relay Chat (IRC) was developed in 1988.

Other systems paralleling ARPANET were developed in the early 1980s, including the research-oriented Computer Science NETwork (CSNET), and the Because It’s Time NETwork (BITNET), which connected IBM mainframe computers throughout the educational community and provided e-mail services. Gateways were set up in 1983 to connect CSNET to ARPANET, and BITNET was similarly connected to ARPANET. In 1989, BITNET and CSNET merged into the Corporation for Research and Educational Networking (CREN).

17. The National Science Foundation NETwork

In 1986, the National Science Foundation NETwork (NSFNET) was created. NSFNET networked together the five national supercomputing centers using dedicated 56-Kbps lines. The connection was soon seen as inadequate and was upgraded to 1.544-Mbps T1 lines in 1988. In 1987, NSF and Merit Network agreed to jointly manage the NSFNET, which had effectively become the backbone of the emerging Internet. By 1989, the Internet had grown to more than 100,000 hosts, and the Internet Engineering Task Force (IETF) was officially created to administer its development. In 1990, NSFNET officially replaced the aging ARPANET and the modern Internet was born, with more than 20 countries connected.

Cisco Systems was one of the first companies in the 1980s to develop and market routers for Internet Protocol (IP) internetworks, a business that today is worth billions of dollars and is a foundation of the Internet. Hewlett-Packard was Cisco’s first customer for its routers, which were originally called gateways.

18. Analog Cellular

In wireless telecommunications, analog cellular was implemented in Norway and Sweden in 1981. Systems were soon rolled out in France, Germany, and the United Kingdom. The first U.S. commercial cellular phone system, which was named the Advanced Mobile Phone Service (AMPS) and operated in the 800-MHz frequency band, was introduced in 1983. By 1987, the United States had more than 1 million AMPS cellular subscribers, and higher-capacity digital cellular phone technologies were being developed. The Telecommunications Industry Association (TIA) soon developed specifications and standards for digital cellular communication technologies.

19. Microsoft’s MS-DOS

A landmark event that was largely responsible for the phenomenal growth in the PC industry (and hence the growth of the client/server model and local area networking) was the release of the first version of Microsoft’s text-based, 16-bit MS-DOS operating system in 1981. Microsoft, which had become a privately held corporation with Bill Gates as president and chairman of the board and Paul Allen as executive vice president, licensed MS-DOS 1 to IBM for its PC. MS-DOS continued to evolve and grow in power and usability until its final version, MS-DOS 6.22, which was released in 1993.

One year after the first version of MS-DOS was released in 1981, Microsoft had its own fully functional corporate network, the Microsoft Local Area Network (MILAN), which linked a DEC 206, two PDP-11/70s, a VAX 11/250, and a number of MC68000 machines running XENIX. This setup was typical of heterogeneous computer networks in the early 1980s.

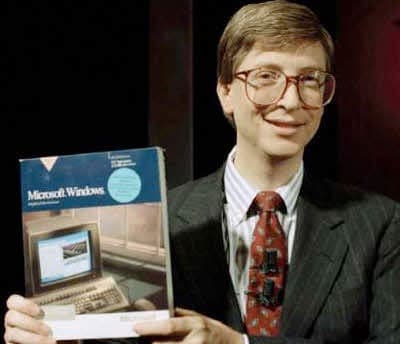

20. Microsoft Windows

In 1983, Microsoft unveiled its strategy to develop a new operating system called Microsoft Windows with a graphical user interface (GUI). Version 1 of Windows, which shipped in 1985, used a system of tiled windows and could work with several applications simultaneously by switching between them. Version 2 was released in 1987 and added support for overlapping windows and expanded memory.

Microsoft launched its SQL Server relational database server software for LANs in 1988. By version 7, released in 1998, SQL Server had become an enterprise-class application competing with other major database platforms such as Oracle and DB2. IBM and Microsoft jointly released their OS/2 operating system in 1987 and released OS/2 1.1 with Presentation Manager a year later.

21. RISC Processor and Apple Macintosh

In miscellaneous developments, IBM researchers developed the Reduced Instruction Set Computing (RISC) processor architecture in 1980. Apple Computer introduced its Macintosh computing platform in 1984 as the successor to its Lisa system; its windows-based GUI was a precursor to Microsoft Windows.

Apple also helped popularize the 3.5-inch floppy disk, a Sony design, by adopting it in the 1984 Macintosh. CD-ROM technology was developed by Sony and Philips in 1985. (Recordable CD-R technologies were developed in 1991.) IBM released its AS/400 midrange computing system in 1988, and it remains popular to this day.

22. Conclusion

In conclusion, the 1980s marked a transformative era in networking history, characterized by groundbreaking developments such as the rise of the IBM PC, the standardization of Ethernet, the divestiture of AT&T, and the introduction of fiber-optic cabling. These advancements set the stage for the further evolution of networking in the 1990s, a decade that brought even more technological milestones. To continue exploring the journey of networking, read about the developments of the 1990s in “Networking History 1990”, and for a deeper understanding of the roots of these advancements, revisit “Networking History in the 1970s”.

23. References

- “A Brief History of the Internet & Related Networks”, by Vint Cerf, Internet Society.

- “Computer Network Architectures and Protocols”, by Carl A. Sunshine.

- “Encyclopedia of Networking”, by Werner Feibel, The Network Press.

- “Computer Networking with Internet Protocols and Technology”, by William Stallings, Pearson Education, 2004.

- “Computer Networking: A Top-Down Approach”, by James F. Kurose and Keith W. Ross, Pearson Education, 2005.